This page expands on each of these points below.

LiveKit delivers secure, low-latency audio and video streams while leaving AI processing, reasoning, and storage to external systems.

LiveKit handles real-time media transport, session management, and encryption, but does not perform speech recognition, language understanding, or business logic.

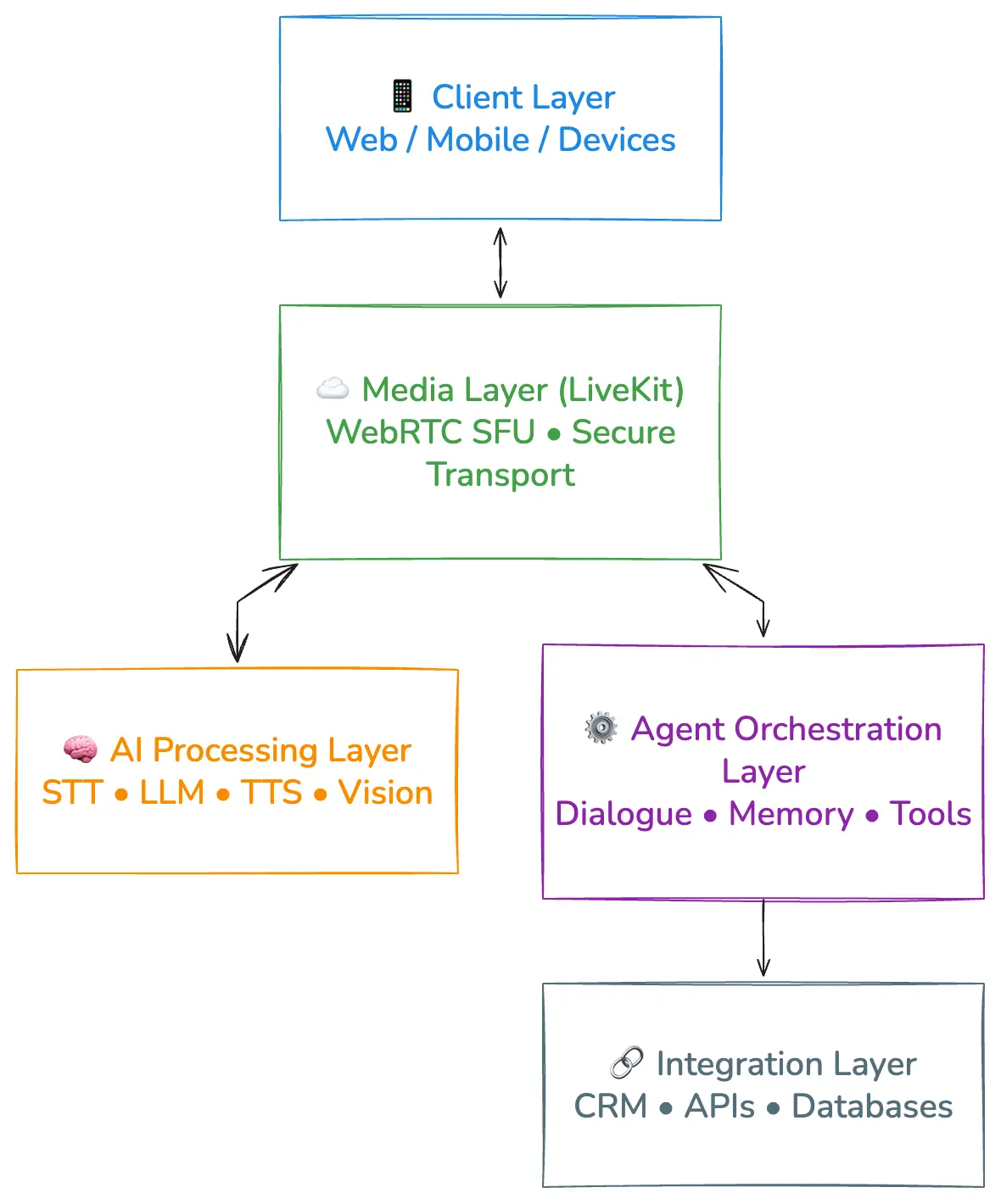

At a high level, a typical WebRTC production architecture includes:

This distinction is essential for architecture, scaling, and compliance.

LiveKit is media infrastructure. AI services plug into it, but run elsewhere.

A LiveKit-based AI agent system separates media transport, AI processing, orchestration, and integration layers for scalable and flexible real-time interactions.

AI agents built with LiveKit are systems where users communicate through live voice or video, and AI responds in real time. The agent may answer questions, perform tasks, guide users, or operate as a digital assistant.

LiveKit does not make the system intelligent. It enables real-time communication between users and AI processing services. A typical real-time AI agent system has several layers working together.

Client layer: browsers, mobile apps, kiosks, or devices capture microphone and camera input and play back AI audio or video.

Media layer (LiveKit): handles secure, real-time transport of audio and video streams.

AI processing layer: runs speech recognition (STT), language models (LLMs), text-to-speech (TTS), and vision models.

Orchestration layer: manages conversation flow, turn-taking, interruptions, memory access, and tool usage.

Integration layer: connects the agent to CRMs, databases, APIs, and internal systems.

LiveKit stays focused on the media layer. Everything related to understanding, reasoning, or storing data lives outside it.

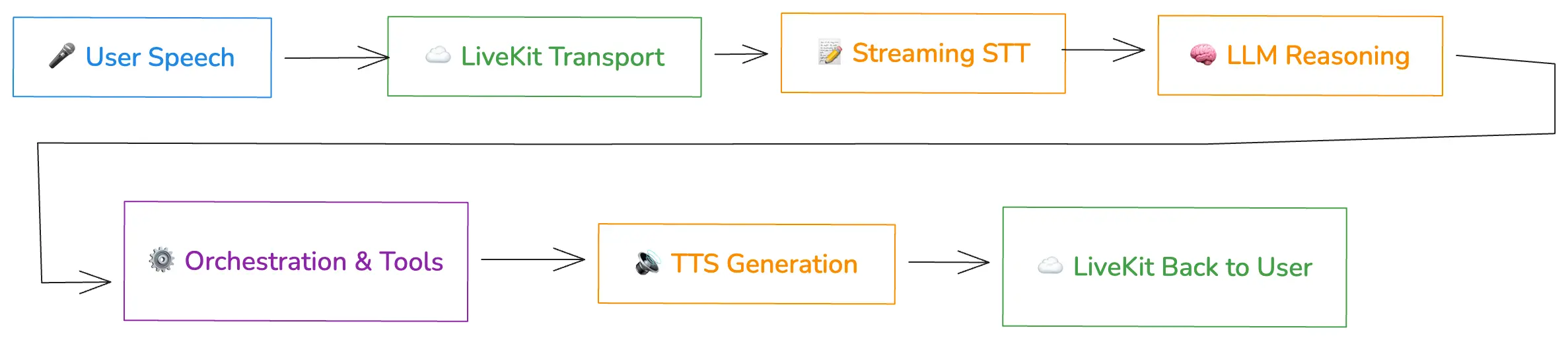

User speech travels through LiveKit to AI services for transcription, reasoning, and synthesis, then returns to the user as real-time audio.

When someone speaks to an AI agent, several things happen very quickly behind the scenes.

LiveKit carries the sound both ways. It never decides what the words mean.
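The round trip described above can be sketched as three external stages chained together. This is a minimal illustration, not LiveKit's API: `transcribe`, `generate_reply`, and `synthesize` are hypothetical stand-ins for real STT, LLM, and TTS services, and the media layer appears only as the bytes going in and coming out.

```python
from dataclasses import dataclass
from typing import Callable


# Hypothetical stand-ins for external AI services (not LiveKit APIs).
def transcribe(audio: bytes) -> str:
    """Pretend STT: treats the audio payload as UTF-8 text."""
    return audio.decode("utf-8")


def generate_reply(transcript: str) -> str:
    """Pretend LLM: answers with a fixed template."""
    return f"You said: {transcript}"


def synthesize(text: str) -> bytes:
    """Pretend TTS: encodes the reply text as bytes."""
    return text.encode("utf-8")


@dataclass
class VoicePipeline:
    """Chains STT, LLM, and TTS; the media layer only moves the bytes."""
    stt: Callable[[bytes], str]
    llm: Callable[[str], str]
    tts: Callable[[str], bytes]

    def handle_utterance(self, audio_in: bytes) -> bytes:
        transcript = self.stt(audio_in)   # audio -> text
        reply = self.llm(transcript)      # text -> response text
        return self.tts(reply)            # response text -> audio


pipeline = VoicePipeline(stt=transcribe, llm=generate_reply, tts=synthesize)
audio_out = pipeline.handle_utterance(b"hello")
```

Because each stage is just a callable, any one of them can be swapped for a different provider without changing how audio enters or leaves the pipeline.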

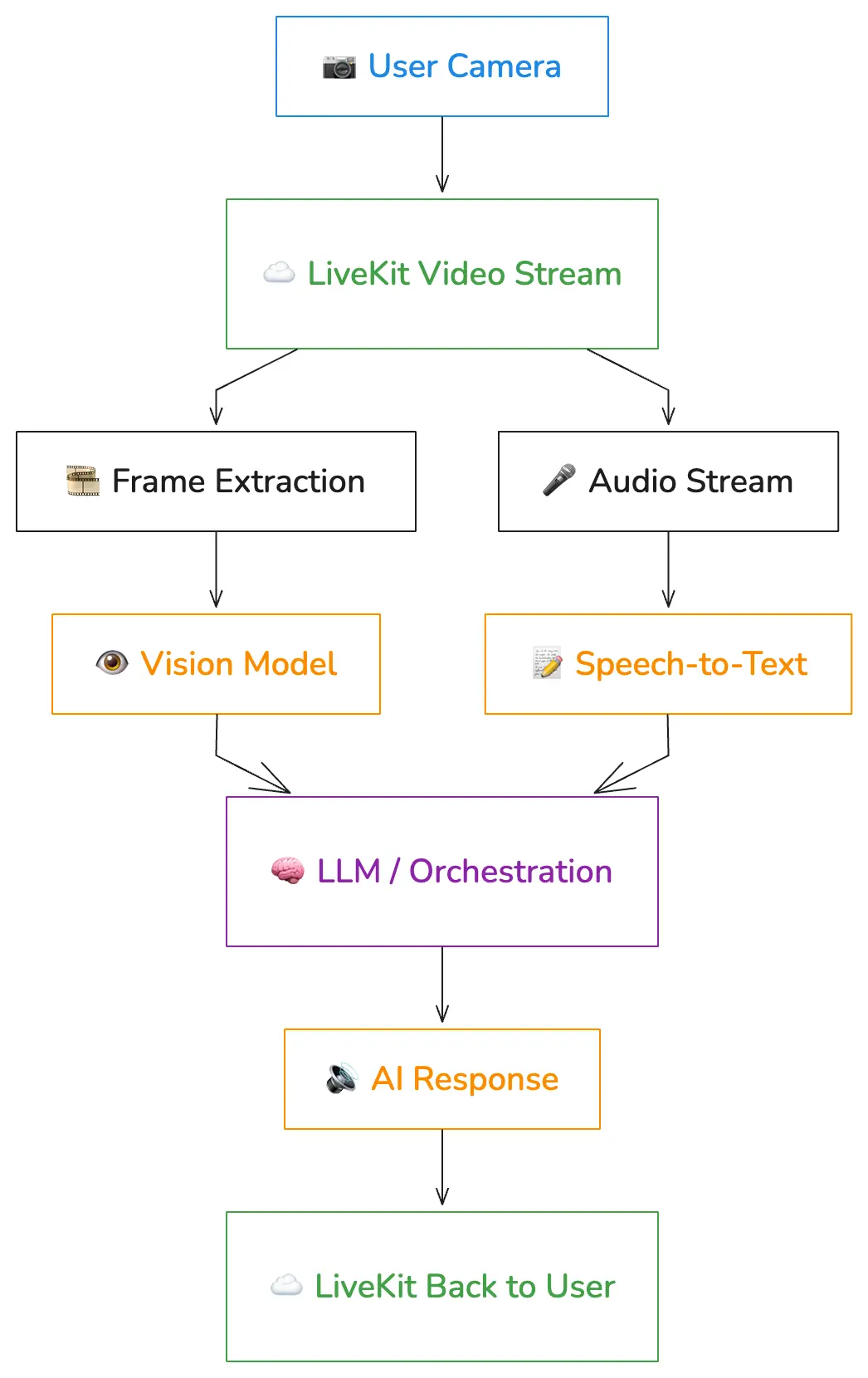

LiveKit can carry live video streams to vision models, allowing AI agents to analyze visual input while maintaining fast, secure transport.

Machine learning for STT, LLM reasoning, TTS, and vision inference runs externally, while LiveKit focuses solely on delivering media streams reliably.

Media delivery and machine learning live in different worlds.

LiveKit focuses on moving packets in real time, keeping streams stable even when networks are messy.

Machine learning systems focus on turning raw audio and video into text, meaning, and responses. These systems usually run on specialized hardware and scale based on inference demand, not on bandwidth.

Speech recognition, language reasoning, speech synthesis, and vision inference all happen in external AI services.

This separation lets teams upgrade models, change providers, or adjust compute resources without touching the media layer.

Low-latency transport, streaming transcription, and fast TTS are key to making AI conversations feel natural and responsive.

People notice delays fast. Even a second of silence can feel awkward in conversation. Real-time AI systems need to keep total round-trip delay low enough to feel responsive.

Several parts add to latency. Network transport adds a small delay, which LiveKit minimizes with efficient routing and adaptive streaming.

Speech recognition takes time to process audio, but streaming models can start returning words quickly. Language models may take longer, especially for complex reasoning, though streaming responses help reduce perceived wait time. Text-to-speech adds another short delay before playback starts.

LiveKit reduces network and media latency, not model inference time.
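A rough latency budget makes this concrete. The per-stage numbers below are assumptions chosen for illustration, not measurements of LiveKit or of any particular model; the point is only how the arithmetic works.

```python
# Illustrative per-stage delays in milliseconds (assumed, not measured).
stage_ms = {
    "network_transport": 80,
    "stt_first_words": 200,
    "llm_first_token": 350,
    "tts_first_audio": 150,
}


def total_latency(stages: dict) -> int:
    """Sum of all stage delays for one conversational turn."""
    return sum(stages.values())


def transport_share(stages: dict, transport_key: str = "network_transport") -> float:
    """Fraction of the total delay that comes from media transport."""
    return stages[transport_key] / total_latency(stages)


total = total_latency(stage_ms)
share = transport_share(stage_ms)

# Halving transport delay only removes half of the transport stage.
faster_transport = dict(stage_ms, network_transport=stage_ms["network_transport"] // 2)
saved_ms = total - total_latency(faster_transport)
```

With these assumed figures, transport is roughly a tenth of the total, so most of the improvement has to come from the model stages.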

AI agents use voice activity detection and streaming transcripts to handle interruptions and enable smooth conversational turn-taking.

Talking over each other is natural in human conversation. AI agents need to handle that too.

Voice activity detection helps determine when a user starts and stops speaking. Silence thresholds signal when to begin processing speech. Streaming transcripts allow the system to anticipate when a sentence is about to end.

If the AI is speaking and the user interrupts, the orchestration layer stops audio playback and shifts back into listening mode. These behaviors are controlled in the agent logic, not in LiveKit itself.
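The turn-taking behavior above can be sketched as a small state machine living in the orchestration layer. This is a simplified sketch under stated assumptions: `TurnManager`, its event names, and the threshold values are hypothetical, and the per-frame speaking flag is assumed to come from whatever voice activity detector the system uses.

```python
from enum import Enum


class State(Enum):
    LISTENING = "listening"
    USER_SPEAKING = "user_speaking"
    AGENT_SPEAKING = "agent_speaking"


class TurnManager:
    """Hypothetical orchestration-layer helper; not part of LiveKit."""

    def __init__(self, silence_threshold_ms: int = 600, frame_ms: int = 20):
        self.silence_threshold_ms = silence_threshold_ms
        self.frame_ms = frame_ms
        self.state = State.LISTENING
        self.silence_ms = 0
        self.events = []  # actions the orchestrator should take

    def start_agent_speech(self) -> None:
        self.state = State.AGENT_SPEAKING

    def on_frame(self, user_is_speaking: bool) -> None:
        """Feed one VAD decision per audio frame."""
        if user_is_speaking:
            if self.state is State.AGENT_SPEAKING:
                # Barge-in: the user interrupted the agent.
                self.events.append("stop_playback")
            self.state = State.USER_SPEAKING
            self.silence_ms = 0
        elif self.state is State.USER_SPEAKING:
            self.silence_ms += self.frame_ms
            if self.silence_ms >= self.silence_threshold_ms:
                # Enough silence: treat the utterance as finished.
                self.events.append("process_utterance")
                self.state = State.LISTENING
                self.silence_ms = 0


turns = TurnManager(silence_threshold_ms=60, frame_ms=20)
for speaking in [True, True, False, False, False]:
    turns.on_frame(speaking)   # three silent frames reach the 60 ms threshold
turns.start_agent_speech()
turns.on_frame(True)           # user talks over the agent
```

A production system would typically combine this with streaming transcripts to predict sentence endings rather than relying on silence alone.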

Conversation memory and user context are stored externally, letting AI agents personalize responses without relying on LiveKit for storage.

Many AI agents remember things, at least for a while. That might include conversation summaries, user preferences, open tasks, or previous answers.

This information is stored in external databases or vector stores. These systems decide how long data is kept, how it is secured, and how it can be retrieved later.

LiveKit does not store conversation history by default. It simply moves live media.
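As a rough illustration of memory living outside the media layer, the sketch below uses an in-memory SQLite database as a stand-in for an external store. The `ConversationMemory` class, its schema, and the retention window are assumptions made for this example; a real system might use a managed database or a vector store instead.

```python
import sqlite3
import time


class ConversationMemory:
    """Hypothetical external memory store with a simple retention window."""

    def __init__(self, path: str = ":memory:", retention_seconds: float = 3600.0):
        self.db = sqlite3.connect(path)
        self.retention_seconds = retention_seconds
        self.db.execute(
            "CREATE TABLE IF NOT EXISTS memory "
            "(user_id TEXT, note TEXT, created_at REAL)"
        )

    def remember(self, user_id: str, note: str, now: float = None) -> None:
        created = time.time() if now is None else now
        self.db.execute(
            "INSERT INTO memory VALUES (?, ?, ?)", (user_id, note, created)
        )
        self.db.commit()

    def recall(self, user_id: str, now: float = None) -> list:
        """Return notes for this user that are still inside the retention window."""
        current = time.time() if now is None else now
        cutoff = current - self.retention_seconds
        rows = self.db.execute(
            "SELECT note FROM memory WHERE user_id = ? AND created_at >= ? "
            "ORDER BY created_at",
            (user_id, cutoff),
        ).fetchall()
        return [row[0] for row in rows]


memory = ConversationMemory(retention_seconds=100)
memory.remember("user-1", "prefers email follow-ups", now=0)
memory.remember("user-1", "open ticket about billing", now=150)
recent_notes = memory.recall("user-1", now=200)
```

Retention and access rules sit entirely in this store, which is why they can change without any effect on how media is transported.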

LiveKit encrypts audio and video in transit while authentication and storage security are managed by external systems.

LiveKit provides secure transport, but GDPR, HIPAA, and other compliance responsibilities depend on the AI system and application layer.

Audio and video streams are encrypted in transit using DTLS for key exchange and SRTP for media encryption. Secure tokens and signaling controls ensure that only authorized participants can join sessions.
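To show the general idea of token-gated session access, here is a generic HMAC-signed token sketch using only the Python standard library. It is not LiveKit's token format: the claim names (`sub`, `room`, `exp`) and the helper functions are illustrative assumptions, and a real deployment should mint tokens with LiveKit's own server SDKs.

```python
import base64
import hashlib
import hmac
import json
import time


def _b64url(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def _b64url_decode(text: str) -> bytes:
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def _sign(secret: bytes, signing_input: str) -> bytes:
    return hmac.new(secret, signing_input.encode("ascii"), hashlib.sha256).digest()


def issue_token(secret: bytes, identity: str, room: str, ttl_s: int, now: float = None) -> str:
    """Create a short-lived signed token (illustrative claim layout)."""
    issued = int(time.time() if now is None else now)
    header = {"alg": "HS256", "typ": "JWT"}
    claims = {"sub": identity, "room": room, "exp": issued + ttl_s}
    signing_input = (
        _b64url(json.dumps(header).encode()) + "." + _b64url(json.dumps(claims).encode())
    )
    return signing_input + "." + _b64url(_sign(secret, signing_input))


def verify_token(secret: bytes, token: str, room: str, now: float = None) -> bool:
    """Accept only unexpired tokens signed with our secret for this room."""
    try:
        header_b64, claims_b64, sig_b64 = token.split(".")
        expected = _sign(secret, header_b64 + "." + claims_b64)
        if not hmac.compare_digest(expected, _b64url_decode(sig_b64)):
            return False
        claims = json.loads(_b64url_decode(claims_b64))
    except ValueError:
        return False  # malformed token
    current = time.time() if now is None else now
    return claims.get("room") == room and claims.get("exp", 0) > current


token = issue_token(b"server-secret", "alice", "support-room", ttl_s=60, now=1000)
```

The signing secret stays on the backend; clients only ever receive the short-lived token.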

AI services and backend systems use their own security layers, such as API authentication, network isolation, and encrypted storage. Media security and data security are related but handled in different parts of the system.

Real-time streaming and AI data processing fall under different compliance responsibilities.

LiveKit provides secure transport but does not decide whether audio or video is recorded, how transcripts are stored, or how long data is retained. Those decisions belong to the application and AI layers.

Requirements like GDPR, HIPAA, or SOC 2 depend on how the full system handles personal data. Clear boundaries between transport and processing make it easier to define who is responsible for what.

Scaling LiveKit nodes and AI compute separately lets real-time agents handle many users and heavy workloads efficiently.

Scaling happens in two directions at once.

On the media side, more users mean more concurrent streams. LiveKit nodes scale horizontally to handle more rooms, participants, and bandwidth.

On the AI side, more conversations mean more speech recognition, more LLM requests, and more speech synthesis. These workloads scale based on compute resources, often involving GPUs.

Because these layers are separate, a spike in AI demand does not directly overload media routing, and vice versa.
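A back-of-the-envelope capacity estimate shows why the two layers are sized independently. All figures below (participants per media node, conversations per AI worker) are assumptions for illustration; real capacities depend on codecs, hardware, and models.

```python
import math


def nodes_needed(load: int, capacity_per_node: int) -> int:
    """Smallest number of nodes that can carry the given load."""
    if load <= 0:
        return 0
    return math.ceil(load / capacity_per_node)


# Assumed capacities, for illustration only.
PARTICIPANTS_PER_MEDIA_NODE = 500
CONVERSATIONS_PER_AI_WORKER = 40

participants = 5000
ai_conversations = 1200

media_nodes = nodes_needed(participants, PARTICIPANTS_PER_MEDIA_NODE)
ai_workers = nodes_needed(ai_conversations, CONVERSATIONS_PER_AI_WORKER)

# AI demand doubles while the number of connected participants stays flat.
ai_workers_after_spike = nodes_needed(ai_conversations * 2, CONVERSATIONS_PER_AI_WORKER)
media_nodes_after_spike = nodes_needed(participants, PARTICIPANTS_PER_MEDIA_NODE)
```

In this sketch the AI worker count doubles during the spike while the media node count is unchanged, which is the independence the paragraph above describes.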

Redundant media servers, autoscaling AI services, and failover mechanisms keep real-time AI agents running when individual components fail.

LiveKit can be deployed in cloud-hosted, self-hosted, or hybrid configurations to meet performance, control, or regulatory requirements.

Real-time systems must keep running even when parts fail.

Media servers can run in multiple regions with failover options. If a node drops, clients reconnect to another one. AI services use autoscaling, load balancing, and retry logic. Conversation state can be stored so a session can continue even if a single service restarts.
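The retry and failover pattern for AI services can be sketched as follows. The function name and the endpoint callables are hypothetical; a production version would normally add backoff delays, timeouts, and health checks.

```python
from typing import Callable, Sequence, TypeVar

T = TypeVar("T")


def call_with_failover(
    endpoints: Sequence[Callable[[], T]], attempts_per_endpoint: int = 2
) -> T:
    """Try each endpoint in order, retrying a few times before moving on."""
    last_error = None
    for endpoint in endpoints:
        for _ in range(attempts_per_endpoint):
            try:
                return endpoint()
            except ConnectionError as exc:
                last_error = exc  # remember the failure and keep trying
    raise RuntimeError("all endpoints failed") from last_error


# Hypothetical endpoints: the primary region is down, the secondary works.
call_log = []


def primary_region() -> str:
    call_log.append("primary")
    raise ConnectionError("primary region unavailable")


def secondary_region() -> str:
    call_log.append("secondary")
    return "transcript from secondary"


result = call_with_failover([primary_region, secondary_region])
```

The caller sees a normal result as long as at least one endpoint responds, which keeps a single regional outage from ending the conversation.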

Reliability is a shared effort across media infrastructure, AI services, and orchestration logic.

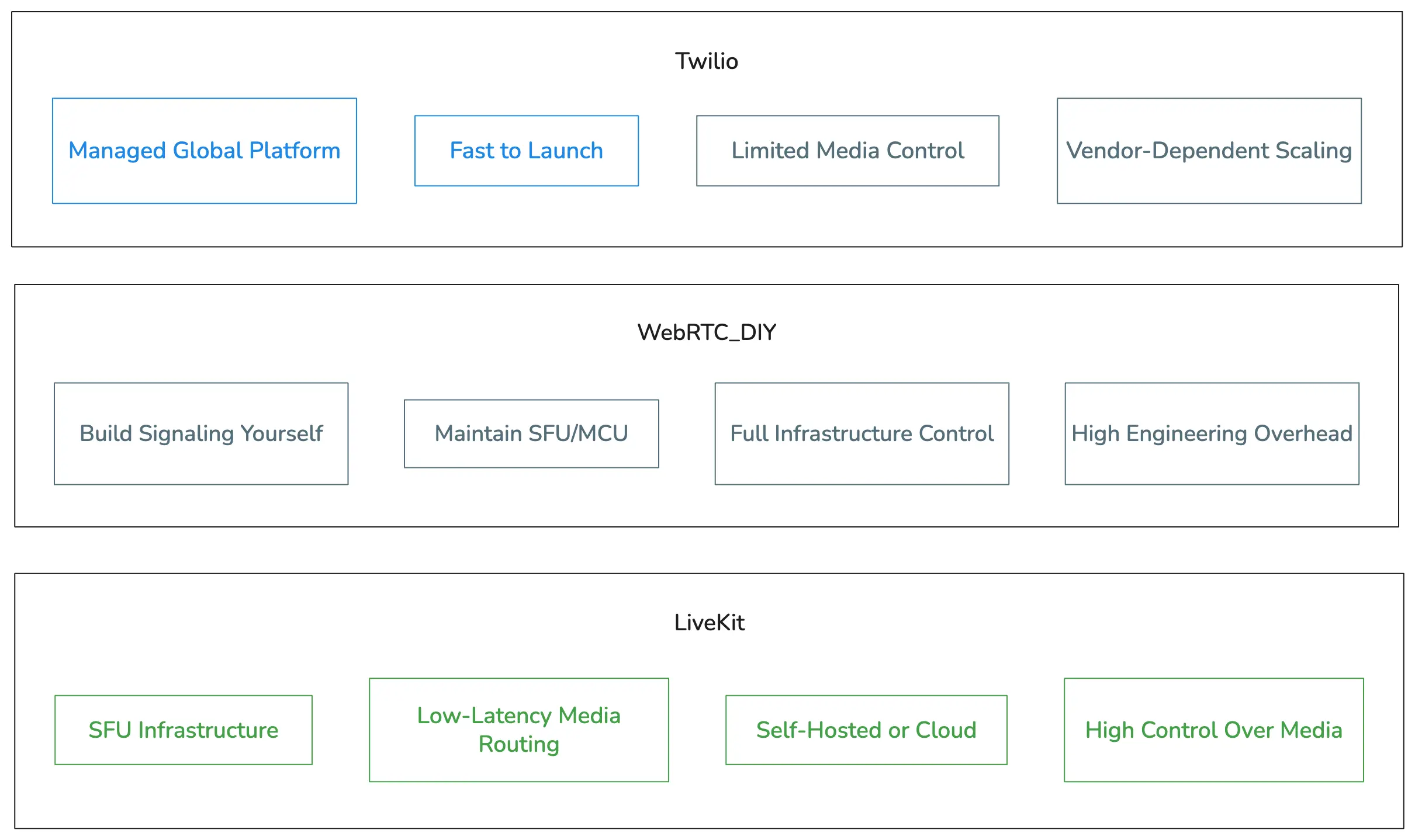

LiveKit offers flexible SFU infrastructure, WebRTC DIY requires full-stack expertise, and Twilio provides a managed, low-control solution.

There are several ways to build real-time communication for AI agents.

LiveKit provides an SFU-based infrastructure that developers can control and integrate deeply with custom AI pipelines. It supports self-hosted or managed deployment and gives flexibility over routing, scaling, and media handling.

WebRTC DIY means building everything from scratch: signaling servers, NAT traversal, SFUs or MCUs, monitoring, scaling logic, and failover. This offers full control but requires deep real-time networking expertise and long-term maintenance effort.

Twilio Programmable Voice or Video offers a managed communications platform with global infrastructure. It reduces operational overhead but gives less low-level control over media routing and may be less flexible for tightly integrated, high-performance AI streaming pipelines.

LiveKit often fits teams that want strong control over real-time media behavior without maintaining the entire WebRTC stack themselves.

Real-time AI agents using LiveKit power voice support, sales assistance, tutoring, healthcare guidance, and smart device control.

Real-time AI agents appear in many forms.

All of these rely on the same pattern: LiveKit moves media in real time, and external AI systems provide understanding and intelligence.

LiveKit handles media transport but does not provide AI models, memory, workflow automation, or compliance guarantees.

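LiveKit is not an AI model provider, a conversation memory store, a workflow automation engine, or a compliance guarantee.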

Fast media transport does not fix slow AI models. If speech recognition or language reasoning takes too long, conversations will still feel delayed.

LiveKit also does not provide built-in conversation design, workflow automation, or long-term memory. Those pieces must be built as part of the broader AI system.

Knowing these limits helps set realistic expectations and leads to better architectural decisions.

LiveKit provides the real-time audio and video transport layer for AI agents.

Speech recognition, reasoning, synthesis, memory, and compliance controls operate in external AI and application services.

Keeping media infrastructure separate from AI processing makes real-time systems more flexible, scalable, and responsive.


No. It transports live audio and video streams.

In external AI services connected to the audio stream.

Yes, but recording and storage are handled outside the media transport layer.

No. Vision processing happens in separate machine learning systems.

Yes. Media transport and AI inference are independent layers.

Speech recognition time, language model inference, and text-to-speech synthesis usually add more delay than media transport.