The sections below expand on each of these points in detail.

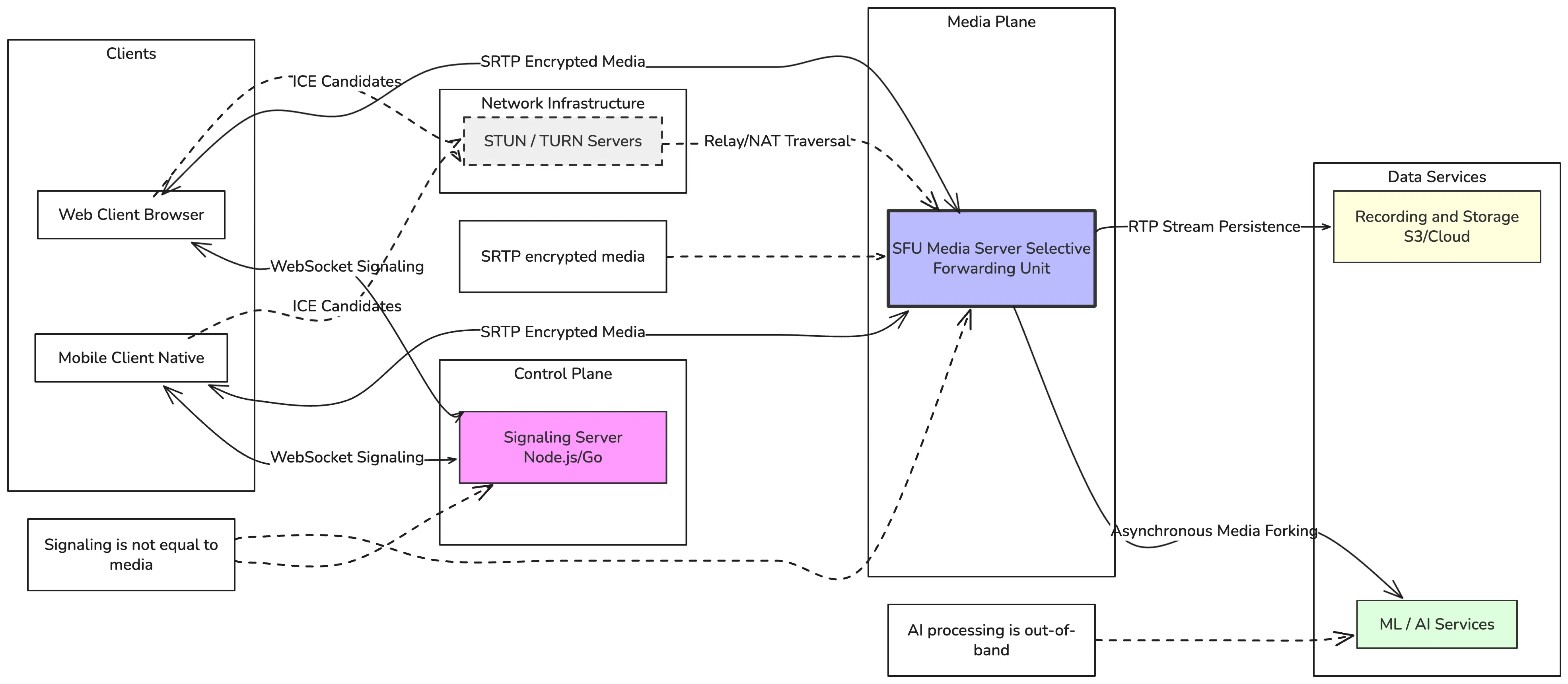

A production WebRTC system is a layered, real-time system where every component has a narrow responsibility and every millisecond matters.

At a high level, a typical WebRTC production architecture includes:

Why this matters: In production, WebRTC is not peer-to-peer in the pure sense. Media servers, edge routing, and monitoring are essential for stability, scalability, and compliance.

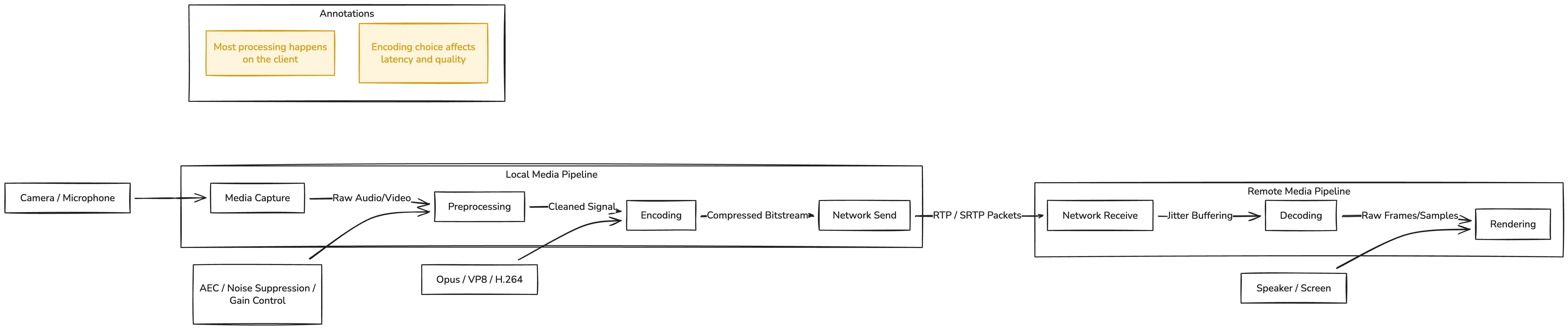

The client is the user’s browser, mobile app, or desktop application. It is responsible for handling media in real time with minimal latency.

Key responsibilities of the WebRTC client:

Client-side processing is where user experience is won or lost. Dropped frames, audio glitches, or camera freezes often start here.

Running features like background blur or noise suppression on the client reduces server load and avoids extra network hops. In real-time systems, saving even 20 ms per frame matters: at 30 fps, the entire frame interval is only about 33 ms.
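One rough way to see why a 20 ms saving matters is to budget each client-side stage against the frame interval. The sketch below assumes a fixed frame rate. The per-stage costs (`capture`, `background_blur`, `encode`) and the `budget_report` helper are made up for illustration, and real numbers have to come from profiling on target devices.

```python
def frame_interval_ms(fps: float) -> float:
    """Time available per frame at a given frame rate."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


def budget_report(fps: float, stage_costs_ms: dict[str, float]) -> dict[str, float]:
    """Summarize how much of the per-frame budget a client pipeline consumes."""
    interval = frame_interval_ms(fps)
    total = sum(stage_costs_ms.values())
    return {
        "interval_ms": interval,
        "total_ms": total,
        "headroom_ms": interval - total,  # negative means frames will be dropped or delayed
        "utilization": total / interval,
    }


if __name__ == "__main__":
    # Hypothetical per-frame costs for a client pipeline running at 30 fps.
    costs = {"capture": 2.0, "background_blur": 20.0, "encode": 8.0}
    print(budget_report(30, costs))
```

Here a 20 ms effect alone uses 60% of a 30 fps frame interval. That is why moving or optimizing a single stage can decide whether the client keeps up.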

Signaling servers exist to answer one question: how do two endpoints connect? They do not carry audio or video.

Instead, they:

Common signaling technologies include WebSocket, HTTP APIs, and SIP-based systems.
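In WebRTC, signaling typically relays SDP offers and answers plus ICE candidates between endpoints. The sketch below shows the per-message routing decision a WebSocket signaling server might make. The JSON shape (`type`, `to`, `from`), the `bye` message type, and the `route_signal` helper are all hypothetical, and transport, authentication, and delivery are left out.

```python
import json
from dataclasses import dataclass

# Message types this hypothetical signaling protocol relays.
ALLOWED_TYPES = {"offer", "answer", "candidate", "bye"}


@dataclass(frozen=True)
class Routed:
    target: str  # peer ID the message should be delivered to
    body: str    # JSON string to forward to that peer


def route_signal(raw: str, sender: str) -> Routed:
    """Validate a signaling message and decide where to forward it.

    Only routing fields are inspected; SDP and ICE payloads pass through
    untouched, and no audio or video ever flows through this code.
    """
    msg = json.loads(raw)
    kind = msg.get("type")
    if kind not in ALLOWED_TYPES:
        raise ValueError(f"unsupported signaling message type: {kind!r}")
    target = msg.get("to")
    if not isinstance(target, str) or not target:
        raise ValueError("missing 'to' field")
    # Stamp the authenticated sender rather than trusting a client-supplied 'from'.
    msg["from"] = sender
    return Routed(target=target, body=json.dumps(msg))
```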


Stateless signaling servers are easier to scale and recover. Store session state in external systems if needed, not in memory.
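A stateless signaling node can be sketched as a thin handler over an external store, so any instance can serve any request after a restart or failover. The `SessionStore` interface, the `DictStore` stand-in (in place of something like Redis or a database), and the room/peer model are assumptions for illustration.

```python
from typing import Protocol


class SessionStore(Protocol):
    """Interface to external session state (e.g. Redis or a database; assumed)."""
    def add_member(self, room: str, peer: str) -> None: ...
    def members(self, room: str) -> set[str]: ...


class DictStore:
    """In-memory stand-in for an external store, used only for this sketch."""
    def __init__(self) -> None:
        self._rooms: dict[str, set[str]] = {}

    def add_member(self, room: str, peer: str) -> None:
        self._rooms.setdefault(room, set()).add(peer)

    def members(self, room: str) -> set[str]:
        return set(self._rooms.get(room, set()))  # return a copy, not internal state


class SignalingNode:
    """A signaling server instance that keeps no session state in its own memory."""
    def __init__(self, store: SessionStore) -> None:
        self.store = store

    def join(self, room: str, peer: str) -> set[str]:
        """Register a peer and return the peers it should negotiate with."""
        existing = self.store.members(room)
        self.store.add_member(room, peer)
        return existing - {peer}
```

Because two `SignalingNode` instances sharing one store see the same rooms, a load balancer can send each request to any instance.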

SFU (Selective Forwarding Unit):

- Forwards streams without decoding
- Very low processing overhead
- Low latency
- Best choice for most multi-party calls

When to use an SFU:

When not to use an SFU:

MCU (Multipoint Control Unit):

- Decodes and mixes multiple streams
- Produces a single output stream
- Higher CPU usage and latency
- Useful for legacy endpoints or centralized recording

When to use an MCU:

When not to use an MCU:

MCUs simplify client logic but shift complexity and cost to the server. SFUs scale better and preserve quality.

Media servers are what make WebRTC work at scale.
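The SFU/MCU trade-off shows up clearly in stream counts. The sketch assumes each participant sends one video stream (no simulcast) and that the MCU sends every participant their own mixed stream. The `StreamCounts` type and helper names are illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StreamCounts:
    client_up: int       # streams each participant sends
    client_down: int     # streams each participant receives
    server_in: int       # streams arriving at the media server
    server_out: int      # streams leaving the media server
    server_decodes: int  # streams the server must decode (CPU-heavy)


def sfu_counts(n: int) -> StreamCounts:
    """SFU: forward each participant's stream to every other participant, no decoding."""
    if n < 2:
        raise ValueError("need at least two participants")
    return StreamCounts(client_up=1, client_down=n - 1, server_in=n,
                        server_out=n * (n - 1), server_decodes=0)


def mcu_counts(n: int) -> StreamCounts:
    """MCU: decode every input and send one mixed stream back to each participant."""
    if n < 2:
        raise ValueError("need at least two participants")
    return StreamCounts(client_up=1, client_down=1, server_in=n,
                        server_out=n, server_decodes=n)


if __name__ == "__main__":
    for n in (4, 10):
        print(n, "SFU:", sfu_counts(n))
        print(n, "MCU:", mcu_counts(n))
```

With 10 participants, the SFU forwards 90 streams but decodes none, and each client receives 9. The MCU sends only 10 streams but must decode (and re-encode) all of them. That re-encoding is where its extra CPU cost and latency come from, and it is also why the SFU preserves the original quality.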

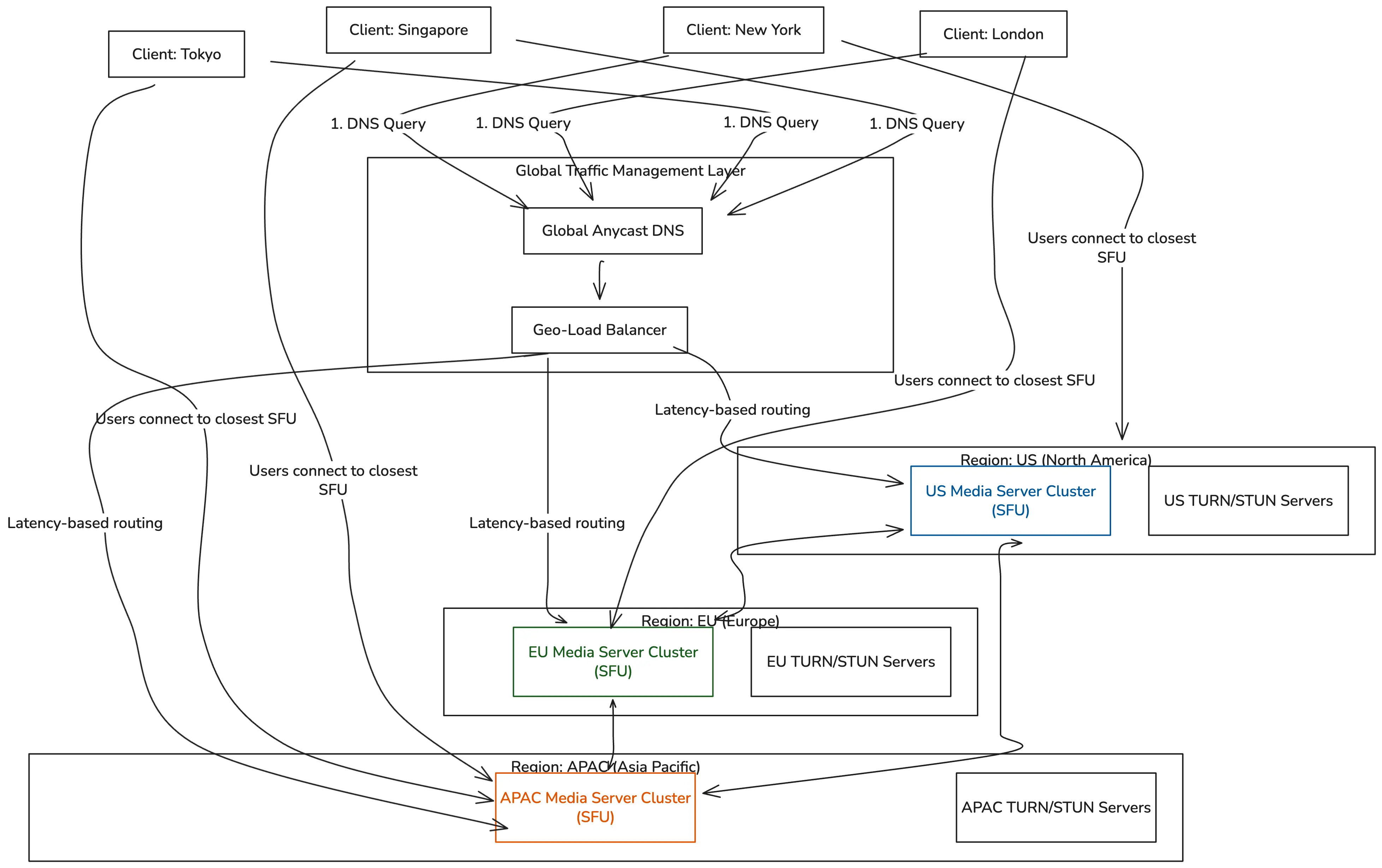

Latency is often dominated by the network, not the codec. The edge layer exists to:

Users can feel latency long before they can describe it. Aim for physical proximity first, codec tuning second.
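Two small calculations support "proximity first". Propagation delay alone sets a floor on round-trip time, since light in fiber covers roughly 200 km per millisecond. Beyond that, measured probes can pick the nearest edge. The region names and the `pick_edge` helper below are hypothetical, and a real system would also weigh server load and capacity.

```python
from statistics import median

# Light in optical fiber covers roughly 200 km per millisecond (about 2/3 of c).
FIBER_KM_PER_MS = 200.0


def min_rtt_ms(distance_km: float) -> float:
    """Propagation-only lower bound on round-trip time over fiber."""
    return 2 * distance_km / FIBER_KM_PER_MS


def pick_edge(probe_rtts_ms: dict[str, list[float]]) -> str:
    """Pick the edge region whose median probe RTT is lowest.

    The median is used rather than the mean so one slow probe does not
    disqualify an otherwise close region.
    """
    candidates = {region: rtts for region, rtts in probe_rtts_ms.items() if rtts}
    if not candidates:
        raise ValueError("no RTT probes available")
    return min(candidates, key=lambda region: median(candidates[region]))
```

For example, a server 6,000 km away can never get below about 60 ms of round-trip time, whatever codec settings are used.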

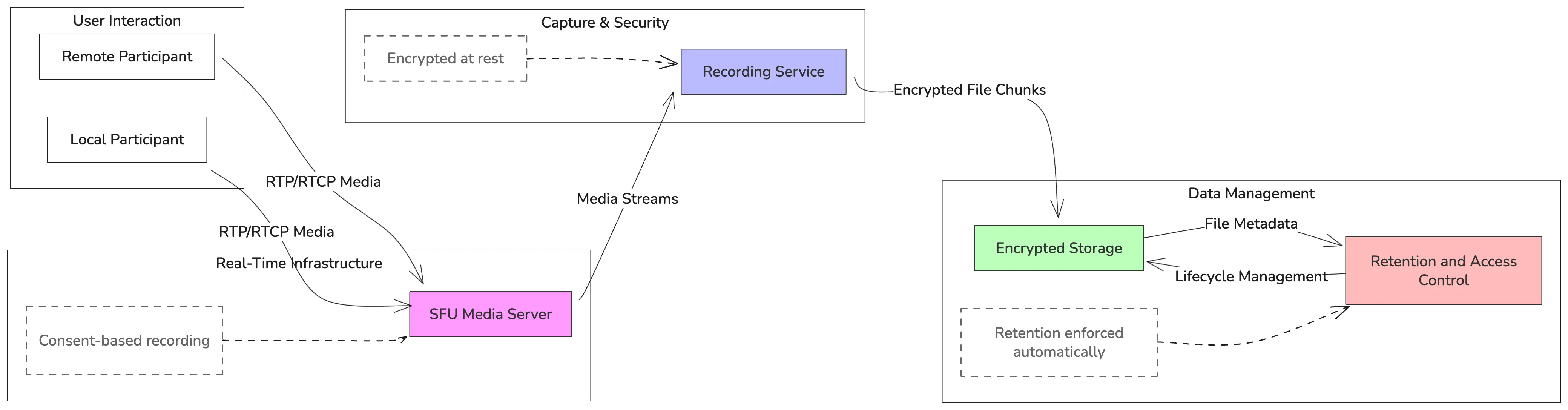

Recording is common in production systems, even when users rarely replay calls.

Recording pipelines usually include:

Design recording systems for legal and audit needs before analytics or UX features.
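Designing for legal and audit needs first usually means every recording carries its retention rules from the moment it is created. The sketch below assumes a per-recording retention period and a legal-hold flag. The field names and the `deletion_due` check are hypothetical, and actual retention periods depend on the applicable regulations and contracts.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class Recording:
    recording_id: str
    created_at: datetime
    retention_days: int       # set from the applicable legal or contractual policy (assumed)
    legal_hold: bool = False  # blocks deletion while an audit or dispute is pending


def deletion_due(rec: Recording, now: datetime) -> bool:
    """True once the retention period has elapsed and no legal hold applies."""
    if rec.legal_hold:
        return False
    return now >= rec.created_at + timedelta(days=rec.retention_days)


if __name__ == "__main__":
    rec = Recording("call-123", datetime(2024, 1, 1, tzinfo=timezone.utc), retention_days=90)
    print(deletion_due(rec, datetime.now(timezone.utc)))
```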

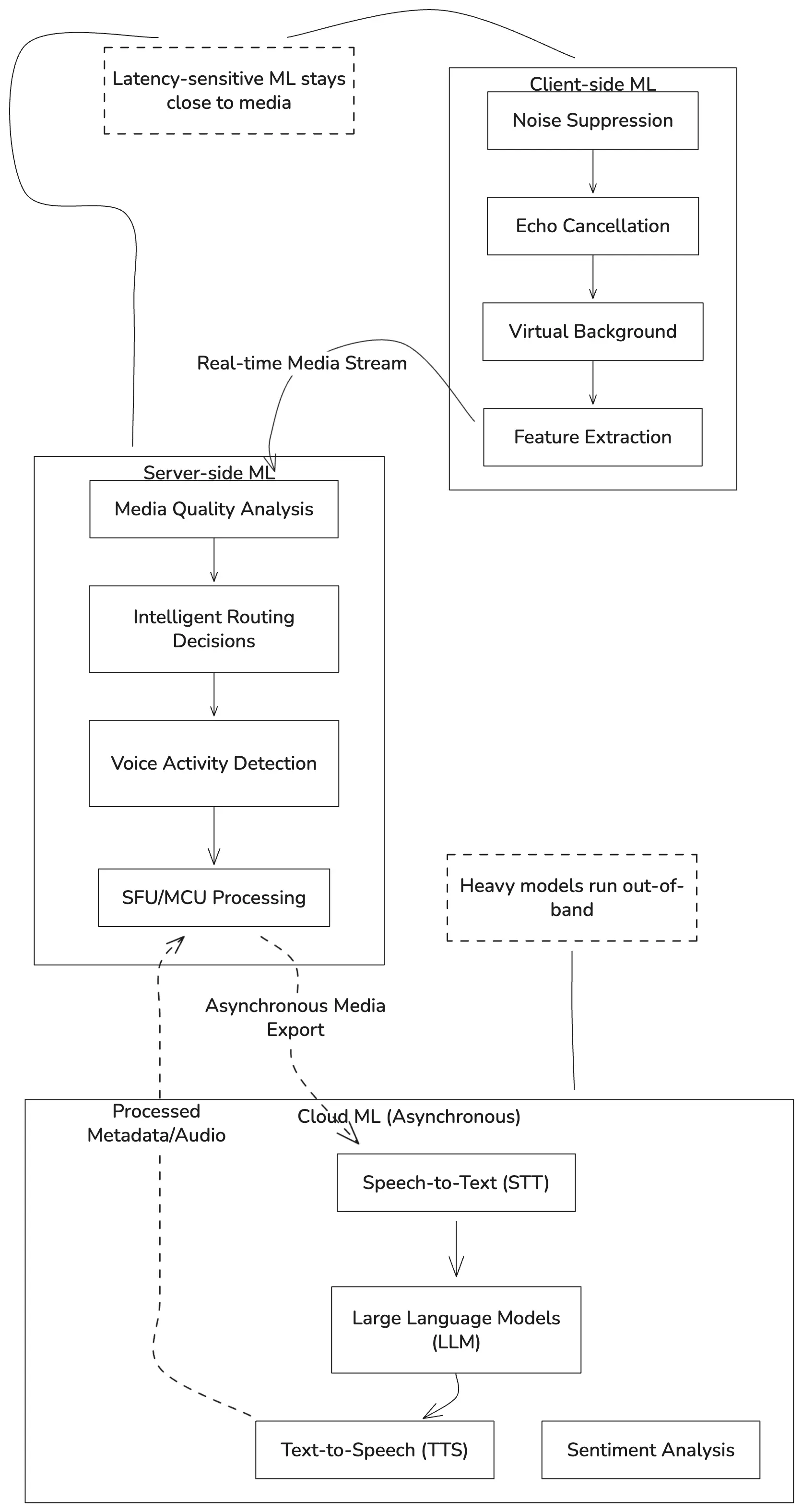

Machine learning adds intelligence to real-time media systems, but it also adds latency and cost.

Common ML placements:

When to use ML in the real-time path:

When not to use ML in the real-time path:

If a feature does not need immediate feedback, move it out of the live path.
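The rule of thumb above can be written as a small placement check. It is a sketch under two assumptions: each feature's inference cost has been measured, and the remaining latency budget is known. `MLFeature` and `placement` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MLFeature:
    name: str
    needs_immediate_feedback: bool  # e.g. noise suppression vs. a post-call summary
    inference_ms: float             # measured cost per frame or audio chunk


def placement(feature: MLFeature, remaining_budget_ms: float) -> str:
    """Return 'offline', 'too_slow', or 'live' for an ML feature."""
    if not feature.needs_immediate_feedback:
        return "offline"   # nobody is waiting on it: keep it out of the live path
    if feature.inference_ms > remaining_budget_ms:
        return "too_slow"  # needed live, but would break the latency budget
    return "live"
```

A `too_slow` result means the model has to get cheaper (or move to the client) before it can join the real-time path. It does not mean the feature should be pushed live anyway.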

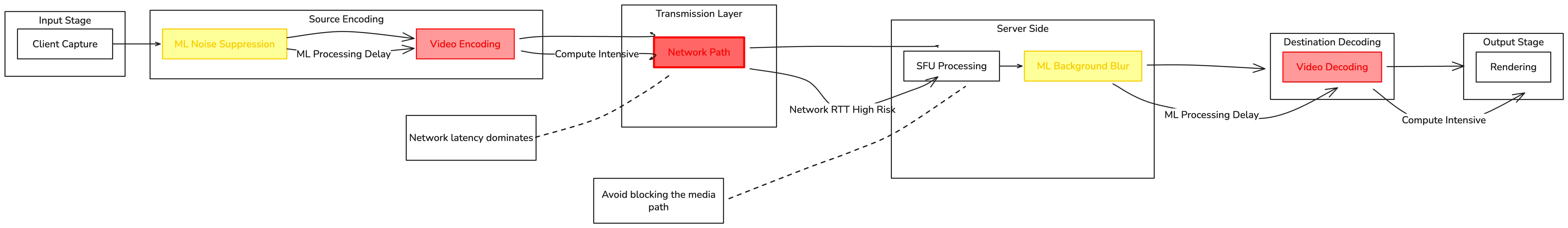

A single video frame travels through multiple stages in a production WebRTC system:

Typical latency ranges:

End-to-end latency usually lands between 50 and 300 ms.
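A latency budget is simply the sum of per-stage delays along that path. The stage names below follow a typical capture-to-render pipeline through an SFU. The millisecond values are hypothetical placeholders chosen to land inside the 50 to 300 ms range, not measurements.

```python
# Hypothetical one-way stage estimates in milliseconds; measure your own system.
STAGES_MS: dict[str, float] = {
    "capture": 10,
    "encode": 15,
    "uplink_network": 30,
    "sfu_forwarding": 5,
    "downlink_network": 30,
    "jitter_buffer": 40,
    "decode": 10,
    "render": 10,
}


def end_to_end_ms(stages: dict[str, float]) -> float:
    """Total one-way latency across all stages."""
    return sum(stages.values())


def over_budget(stages: dict[str, float], budget_ms: float) -> list[str]:
    """If the total exceeds the budget, list stages from most to least expensive."""
    if end_to_end_ms(stages) <= budget_ms:
        return []
    return sorted(stages, key=lambda name: stages[name], reverse=True)
```

With these placeholder values the total is 150 ms. Against a tighter 100 ms target, the jitter buffer and the two network legs are the first places to look.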

Synthetic calls and real-user monitoring catch issues faster than logs alone.
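Both synthetic calls and real-user monitoring produce latency samples, and alerting on a high percentile rather than the average catches tail problems users actually feel. This sketch uses the nearest-rank percentile. The 300 ms default threshold and the `latency_alert` helper are assumptions, not recommended values.

```python
import math


def percentile(samples: list[float], p: float) -> float:
    """Nearest-rank percentile for p in (0, 100]."""
    if not samples or not 0 < p <= 100:
        raise ValueError("need samples and 0 < p <= 100")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)
    return ordered[rank - 1]


def latency_alert(samples_ms: list[float], threshold_ms: float = 300.0, p: float = 95.0) -> bool:
    """Alert when the p-th percentile latency exceeds the threshold."""
    return percentile(samples_ms, p) > threshold_ms
```

A single outlier out of 20 samples does not trigger the alert, but two do, because they push the 95th percentile over the threshold.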

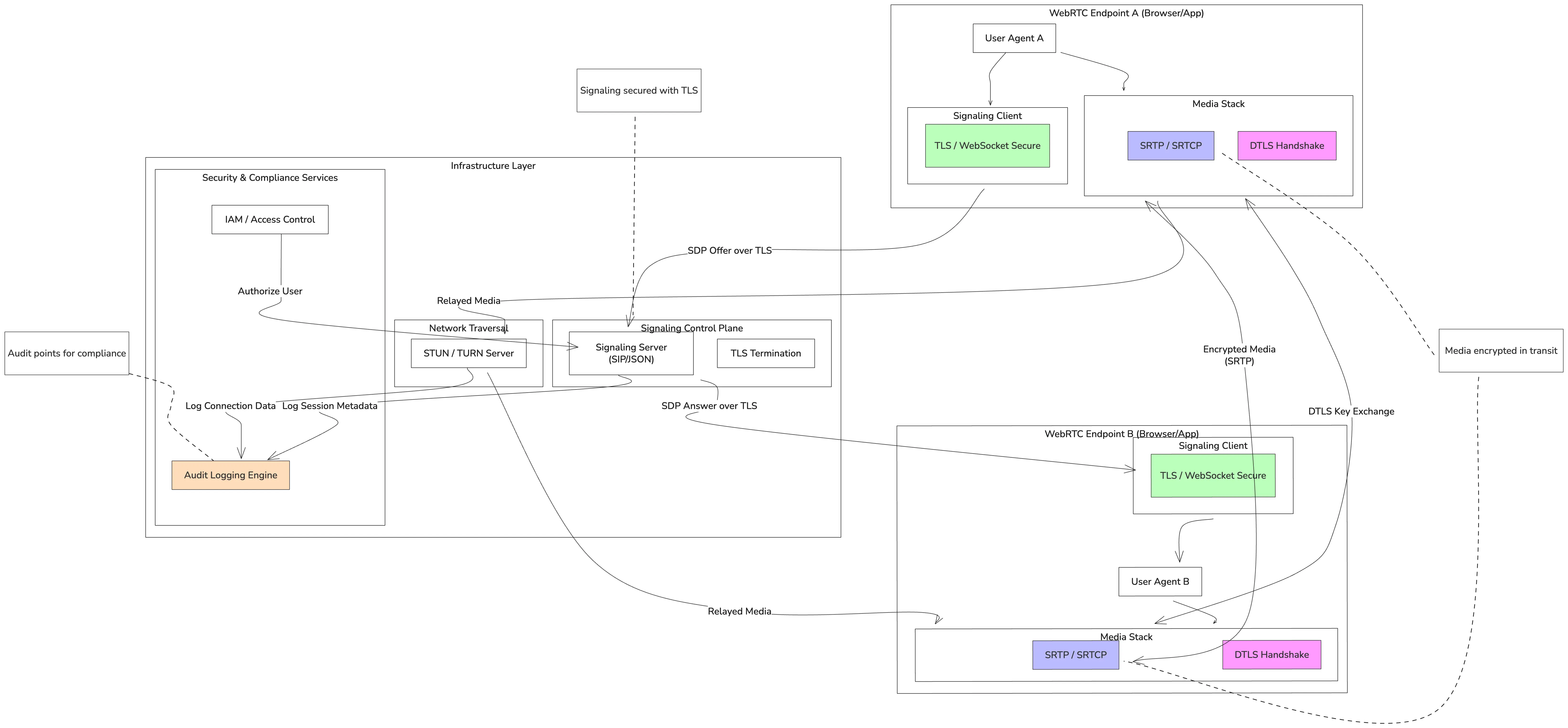

Production WebRTC systems handle sensitive personal data.

Core security and compliance elements include:

Depending on the industry, the system may need to comply with GDPR, HIPAA, or PCI-DSS.

If compliance is added later, it will be expensive and incomplete.


Simulate packet loss, region outages, and client crashes. Production will.
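Real networks tend to lose packets in bursts rather than uniformly. A common way to reproduce this in tests is the simplified Gilbert model, a two-state special case of the Gilbert-Elliott model. The sketch below only generates a drop pattern. The `gilbert_loss_pattern` helper and its parameters are illustrative, and applying the pattern to a real media path (for example through a network emulator) is left out.

```python
import random


def gilbert_loss_pattern(n_packets: int, p_good_to_bad: float,
                         p_bad_to_good: float, seed: int = 0) -> list[bool]:
    """Simulate bursty packet loss with a two-state Gilbert model.

    Returns one flag per packet: True means the packet was dropped. In the
    'good' state every packet arrives; in the 'bad' state every packet is lost,
    so losses come in bursts whose mean length is 1 / p_bad_to_good packets.
    """
    for p in (p_good_to_bad, p_bad_to_good):
        if not 0.0 <= p <= 1.0:
            raise ValueError("transition probabilities must be in [0, 1]")
    rng = random.Random(seed)  # seeded so a failing test run can be replayed
    bad = False
    dropped: list[bool] = []
    for _ in range(n_packets):
        # Transition between states, then drop the packet if the state is 'bad'.
        if bad:
            bad = rng.random() >= p_bad_to_good
        else:
            bad = rng.random() < p_good_to_bad
        dropped.append(bad)
    return dropped


if __name__ == "__main__":
    pattern = gilbert_loss_pattern(100_000, p_good_to_bad=0.01, p_bad_to_good=0.2)
    # The long-run loss rate of this model is p / (p + r) = 0.01 / 0.21, about 4.8%.
    print(f"loss rate: {sum(pattern) / len(pattern):.3f}")
```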

Even well-designed WebRTC architectures fail in predictable ways. Knowing these failure modes helps prevent outages and degraded user experience.

Common issues include:

Why these failures happen:

When issues appear, look at architecture and traffic patterns first, not just code.
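One traffic pattern worth designing for is the reconnect storm. After a region outage, every affected client retries at once, and retrying in lockstep can overload signaling and media servers as they come back. Exponential backoff with "full jitter" spreads those retries out. The sketch below assumes a 0.5 s base delay and a 30 s cap, and `reconnect_delays` is a hypothetical helper.

```python
import random
from typing import Optional


def reconnect_delays(attempts: int, base_s: float = 0.5, cap_s: float = 30.0,
                     rng: Optional[random.Random] = None) -> list[float]:
    """Delays (seconds) before each reconnect attempt, using backoff with full jitter.

    Attempt i waits a random time in [0, min(cap_s, base_s * 2**i)], so clients
    that dropped at the same moment do not all retry at the same moment.
    """
    rng = rng if rng is not None else random.Random()
    return [rng.uniform(0, min(cap_s, base_s * 2 ** i)) for i in range(attempts)]
```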


We build scalable WebRTC systems with media servers, edge routing, monitoring, and failure handling – no P2P illusions.

We start with architecture diagrams, data flow, and system boundaries before writing code. Clients see how signaling, media, storage, and ML fit together.

Latency budgets are defined upfront. We know where milliseconds are lost and how to control them across clients, networks, and media servers.

We design, customize, and scale SFU architectures for multi-party calls, streaming, recording, and AI-assisted flows.

Encryption, recording, retention, and access control are part of the core design. GDPR, HIPAA, and enterprise requirements are handled at the system level.

We plan for packet loss, reconnects, region outages, and partial service degradation. WebRTC systems must fail gracefully.


WebRTC is used to build real-time audio and video features such as video calls, voice calls, live streaming, and interactive collaboration tools.

No. Most production systems rely on media servers (SFU or MCU), edge routing, and monitoring to scale reliably.

In most cases, SFU-based architectures are the default choice due to lower latency, better scalability, and cost efficiency.

By optimizing client processing, placing media servers close to users, tuning codecs, and avoiding unnecessary server-side processing.

Yes, if encryption, recording, data access, and retention policies are designed into the architecture from the start.

Yes. We design recording pipelines and analytics flows that integrate cleanly with media servers and storage systems.

Poor architecture decisions, underestimated network variability, lack of monitoring, and ignoring failure scenarios.