In 2025, AI video processing is reshaping industries from entertainment to transportation. It automates tasks such as object detection and scene recognition, which can improve both user engagement and operational efficiency. Netflix, for example, uses AI to personalize content and improve the user experience. AI is also used to create virtual actors and to improve video quality through techniques like super resolution.

These advances are driven by faster GPUs and by edge computing, which reduces latency for real-time applications. Developers build these systems with frameworks like TensorFlow and PyTorch, with an emphasis on speed and real-time performance. The benefits include automated video editing, personalized content, and cost savings, and adoption is expected to spread as more industries find uses for the technology.

Current State of AI Video Processing

The shift from traditional to AI-enabled video processing is clear, with machines now handling tasks like object detection and scene recognition.

Real-world applications, such as self-driving cars and automated sports highlights, show AI's potential.

Success stories from companies like Netflix, using AI to personalize thumbnails, demonstrate the strength of this technology in enhancing user engagement. This is further supported by recent findings showing that AI-powered adaptive streaming technology can enhance user experience through real-time adjustments based on viewer behavior (Khan, 2023).

Why Trust Our AI Video Processing Expertise?

At Fora Soft, we've been at the forefront of AI-powered multimedia solutions for over 19 years, specializing in video surveillance, e-learning, and telemedicine applications. Our team's deep expertise in WebRTC, LiveKit, and advanced multimedia technologies has enabled us to successfully implement AI recognition, generation, and recommendation systems across numerous enterprise-level projects. With a rigorous selection process where only 1 in 50 developers makes the cut, we maintain the highest standards in AI video processing implementation.

Our track record speaks for itself - we've achieved a 100% project success rating on Upwork, demonstrating our ability to deliver reliable AI video processing solutions. We've helped businesses across various industries integrate AI into their video platforms, from implementing adaptive bitrate streaming to developing sophisticated content recommendation systems. This hands-on experience gives us unique insights into the evolving landscape of AI video processing and its practical applications.

Evolution From Traditional to AI-Powered Processing

The market for AI video processing is growing quickly as more businesses start using it, with projections showing a compound annual growth rate of 20% from 2023 to 2030 (Andrade et al., 2023). Leading this growth are industries like entertainment, security, and healthcare.
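To make the 20% figure concrete, compound growth can be worked out directly. The sketch below assumes a constant 20% rate applied once per year across the seven years from 2023 to 2030. The base value of 100 is a placeholder index, not a market size taken from the cited source.

```python
def compound_growth(base: float, rate: float, years: int) -> float:
    """Value after `years` of growth at a constant annual `rate`."""
    return base * (1 + rate) ** years


# Assumption: index the 2023 market at 100 (placeholder, not a real figure).
index_2030 = compound_growth(100.0, 0.20, 2030 - 2023)
print(f"2030 index: {index_2030:.1f}")
```

Under those assumptions the index reaches about 358, meaning the market would be roughly 3.6 times its 2023 size by 2030.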

These industries are finding new ways to use AI for tasks such as content creation, surveillance, and medical imaging.

They are investing heavily in AI to make video processing faster, smarter, and more efficient for their users.

Market Growth and Enterprise Adoption Rates

Although traditional video processing methods are still in use, AI-powered processing is swiftly taking over the market. The market growth and enterprise adoption rates are rapidly increasing, with businesses seeking better video quality, efficiency, and user experiences. A growth comparison is illustrated below:

Developers are integrating AI for tasks like object detection and real-time analytics, enhancing functionalities and user satisfaction. Demand for AI tools in video processing is skyrocketing due to their accuracy and speed.

Key Industries Leading Innovation

With AI video processing on the rise, several industries are leading the way in innovating and adopting these advanced technologies. Retail is enhancing customer experiences with video analytics, while healthcare is improving remote patient monitoring.

Media and entertainment enterprises are using AI for personalized content delivery. Companies are integrating AI into their enterprise video platforms for better security and efficiency.

Manufacturing is utilizing AI for predictive maintenance and quality control. Transportation is enhancing safety and traffic management through AI-driven video analysis.

Real-World Applications and Success Stories

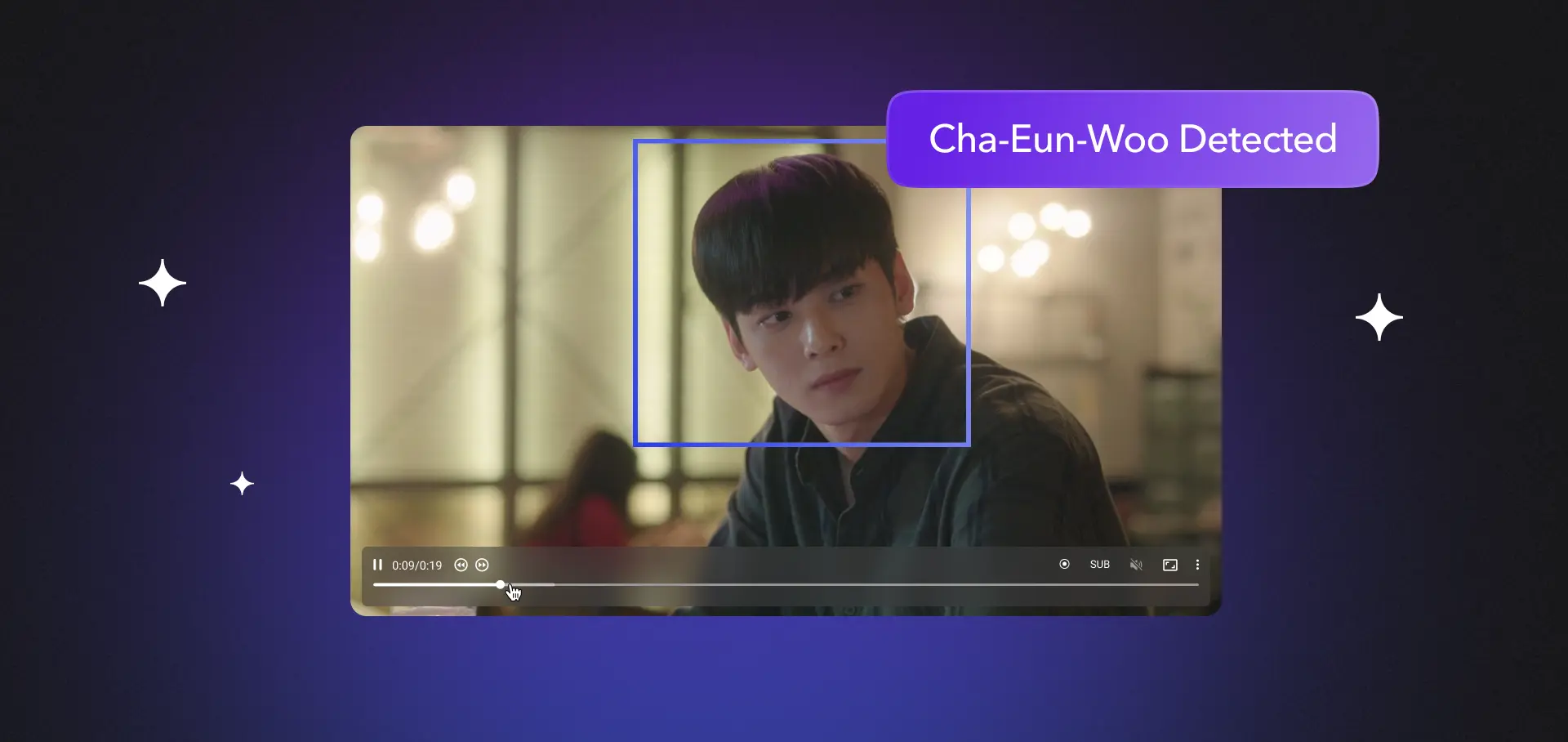

AI is changing how videos are made, with companies using it to create content, personalize experiences, and even generate virtual actors. For instance, some platforms use AI to automatically edit videos or recommend scenes based on viewer preferences.

Moreover, AI can write scripts or create synthetic characters, revolutionizing content production.

AI-Generated Content Creation

The current state of AI video processing sees a remarkable rise in AI-generated content creation. Advanced AI assistants now automate video creation processes, like editing, scripting, and even generating synthetic voices for narration. These tools can swiftly produce videos by analyzing input data, selecting relevant clips, and stitching them together seamlessly.

This dramatically accelerates production, making it easier and faster to create engaging content tailored to specific audiences. Furthermore, AI can generate realistic deepfakes for films or personalized videos, although ethical considerations are vital in such applications.

This evolution in AI capabilities is set to revolutionize the media landscape, offering diverse possibilities for enhancing product features and user experiences.

Personalized Video Experiences

Personalized video experiences are becoming increasingly common, thanks to advancements in AI video processing. Companies like Netflix use AI to tailor video experiences, recommending shows based on viewing history.

Interactive video platforms, like Eko, allow users to shape storylines, enhancing engagement. AI can analyze viewer behavior, adjusting content in real-time to keep audiences hooked.

This technology is transforming how viewers consume and interact with digital content, making it more immersive and personalized than ever before.

Virtual Actors and Automated Scriptwriting

One rapidly evolving area of video processing is the use of virtual actors and automated scriptwriting. AI can now generate realistic virtual actors for video content, reducing production costs. Here's what's happening:

- AI-Generated Actors: Companies are using AI to create digital actors that can star in movies or ads.

- Automated Scripts: AI can write scripts for videos, even personalizing them for AI-powered meeting tools.

- Real-Time Rendering: Virtual actors can be rendered in real-time, allowing for interactive experiences.

This technology is already used in commercials and films, with some virtual actors even becoming influencers.

Advanced Technical Capabilities and Infrastructure

By 2025, AI video processing is set to see major advances in next-generation processing technologies. These advancements focus on boosting video quality and optimization, making videos load faster and look better for end users. Generative AI and large language models are revolutionizing video creation and understanding by producing highly realistic videos and enhancing user experience (Zhou et al., 2024).

For instance, new tools can automatically enhance video resolution and reduce buffering times, greatly improving user experience, while providing new layers of interaction with visual content.

Next-Generation Processing Technologies

By 2025, AI video processing is set to see major advancements in GPU technology, which will enhance edge computing integration for faster local processing.

The selection of AI models and frameworks is evolving, with developers choosing more efficient and lightweight options for better performance.

Real-time processing improvements are on the horizon, enabling smoother and quicker video analysis and rendering for end-users.

GPU Advancements and Edge Computing Integration

As the demand for AI video processing surges, GPU advancements and edge computing integration are becoming vital for next-generation processing technologies. These advancements enable real-time video streaming and efficient cloud storage, enhancing user experiences.

Key developments include:

- Faster Processing Speeds: Modern GPUs can handle more data quickly, making video analysis and rendering smoother.

- Reduced Latency: Edge computing brings processing closer to the data source, minimizing delays in video streaming.

- Energy Efficiency: New GPUs and edge devices are designed to use less power, making them more sustainable for long-term use.

These improvements help make AI video processing not only faster but also more dependable for end users. With edge computing, data doesn't need to travel all the way to cloud storage before it is processed, which makes the pipeline quicker and more efficient.

This integration is vital for applications like live sports broadcasts, where every second counts. Developers are excited about these capabilities, as they open up new possibilities for innovative video applications.

AI Models and Framework Selection

How does one choose the right AI models and frameworks for video processing in 2025? It's about balancing complexity, speed, and accuracy for applications like video conferencing and enterprise video. Different AI models handle object detection, scene understanding, and speech transcription, all of which are essential for these tasks. Here's a table of popular options:

In 2025, developers might lean towards PyTorch for its ease of use, but TensorFlow remains strong due to its large user base. OpenCV is chosen for its real-time processing capabilities, especially in video conferencing.

Real-Time Processing Improvements

In 2025, as AI video processing demands grow, real-time capabilities are becoming increasingly essential. Developers are focusing on next-generation processing technologies to handle tasks like live video streaming, instant object detection, and real-time speech transcription.

These advancements are enhancing the meeting experience with HD video clarity. Notable improvements include:

- Edge Computing: Processing data closer to the source reduces latency, making real-time analysis faster.

- Hardware Accelerators: Specialized chips like GPUs and TPUs boost computational speed for AI tasks.

- Algorithm Optimization: Streamlined algorithms efficiently handle complex tasks, ensuring smooth and quick processing.
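In practice, "real-time" means each frame has to be handled within the time the stream allows for it. The sketch below is a minimal illustration of that budget. The function names are hypothetical, and it assumes a fixed inference time per frame, which real models rarely have.

```python
import math


def frame_budget_ms(fps: float) -> float:
    """Time available per frame, in milliseconds, at a given frame rate."""
    return 1000.0 / fps


def frame_stride(fps: float, inference_ms: float) -> int:
    """Process every Nth frame so analysis keeps pace with the stream.

    Returns 1 when inference fits inside the per-frame budget.
    """
    # inference_ms * fps / 1000 is the number of frame intervals one inference spans.
    return max(1, math.ceil(inference_ms * fps / 1000.0))


print(f"Budget at 30 fps: {frame_budget_ms(30):.1f} ms")
print("Stride for an 80 ms model at 30 fps:", frame_stride(30, 80))
```

At 30 fps the budget is about 33 ms per frame, so a model that takes 80 ms would analyze every third frame. Edge processing, hardware accelerators, and leaner algorithms all aim to bring that stride back to 1.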

Quality Enhancement and Optimization

As video streaming gains popularity, AI's role in enhancing quality becomes vital.

Techniques like Super Resolution and Upscaling are making videos sharper and clearer, even on slow networks.

Plus, Adaptive Bitrate Streaming and Mobile Device Optimization are ensuring smooth playback, no matter where you're watching.

Super Resolution and Upscaling Techniques

By 2025, super resolution and upscaling techniques are expected to significantly enhance video processing capabilities. These methods increase the clarity and detail of video, improving how users experience visual content. Here are key developments in this area:

- AI-Driven Upscaling: AI algorithms analyze low-resolution videos and predict high-resolution details, making video management more efficient.

- Real-Time Processing: Advanced GPUs and neural networks enable real-time super resolution, enhancing live streams and gaming.

- Cross-Platform Compatibility: These techniques are being integrated into various devices and platforms, ensuring consistent quality across different displays and systems.
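AI-driven upscaling is usually judged against classical interpolation, so it helps to see what that baseline does. The sketch below implements plain bilinear interpolation on a grayscale frame stored as nested lists. It is the non-AI reference point, not a super-resolution model, and the function name is illustrative.

```python
def upscale_bilinear(frame: list[list[float]], factor: int) -> list[list[float]]:
    """Upscale a grayscale frame by an integer factor with bilinear interpolation."""
    src_h, src_w = len(frame), len(frame[0])
    out = []
    for y in range(src_h * factor):
        # Map the output row back to a fractional source row, clamped at the edge.
        sy = min(y / factor, src_h - 1)
        y0 = int(sy)
        y1 = min(y0 + 1, src_h - 1)
        wy = sy - y0
        row = []
        for x in range(src_w * factor):
            sx = min(x / factor, src_w - 1)
            x0 = int(sx)
            x1 = min(x0 + 1, src_w - 1)
            wx = sx - x0
            # Blend the four neighbouring source pixels.
            top = frame[y0][x0] * (1 - wx) + frame[y0][x1] * wx
            bottom = frame[y1][x0] * (1 - wx) + frame[y1][x1] * wx
            row.append(top * (1 - wy) + bottom * wy)
        out.append(row)
    return out


print(upscale_bilinear([[0.0, 100.0]], 2))
```

Interpolation can only average the pixels it already has, which is why edges come out soft. A learned super-resolution model instead predicts plausible detail that the low-resolution frame does not contain.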

Adaptive Bitrate Streaming

Beyond sharpening video through super resolution, another area of advancement is adaptive bitrate streaming (ABR). ABR automatically adjusts the quality of a video stream in real time based on the viewer's available bandwidth, which keeps playback smooth and reduces buffering. It's vital for video platforms aiming to provide uninterrupted viewing experiences.

Below is a comparison of ABR with traditional streaming:

ABR uses algorithms to monitor the viewer's connection speed and select the highest video quality that can play without stalling. This makes it well suited to modern applications where connection speeds vary, and a key option for product owners who want to improve video delivery for end users.
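The selection step can be sketched in a few lines. The example below is a simplified, throughput-only version of adaptive bitrate selection. The bitrate ladder, the 0.8 safety margin, and the function name are all assumptions made for illustration, and production players typically weigh buffer level as well as measured throughput.

```python
# Hypothetical bitrate ladder: (label, required bandwidth in kbps), lowest first.
LADDER = [("240p", 400), ("480p", 1000), ("720p", 2500), ("1080p", 5000)]


def pick_rendition(throughput_kbps: float, safety: float = 0.8) -> str:
    """Return the highest rendition whose bitrate fits within a safety margin
    of the measured throughput; fall back to the lowest rung otherwise."""
    usable = throughput_kbps * safety
    choice = LADDER[0][0]
    for label, kbps in LADDER:
        if kbps <= usable:
            choice = label
    return choice


for measured in (6500, 3000, 300):
    print(measured, "kbps ->", pick_rendition(measured))
```

With these assumed values, a 6,500 kbps connection gets 1080p, a 3,000 kbps connection drops to 480p because 720p would exceed the safety margin, and a 300 kbps connection falls back to 240p. A player would rerun this choice for each segment as its throughput estimate changes.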

Mobile Device Optimization

While video platforms have made notable strides in enhancing user experience, a critical area that deserves attention is Mobile Device Optimization. Modern video conferencing tools and video collaboration platforms are focusing on:

- Reducing Battery Usage: By using AI to manage background processes and optimize video rendering.

- Improving Video Quality: Through smart resolution adjustments based on device and network capabilities.

- Efficient Bandwidth Usage: Applying machine learning to predict and reduce data consumption without compromising quality.

These advancements aim to enhance the overall user experience on mobile devices, making video communication more seamless and efficient.

Implementation Strategies for 2025

In 2025, companies are weighing different ways to build AI video processing into their products. That includes following development best practices, such as writing readable code and designing with users in mind.

Companies are also weighing costs against potential returns, since AI can be expensive but can also improve user experience and satisfaction.

Development Best Practices

By 2025, AI video processing systems will need solid infrastructure to handle more data and users, meaning servers and networks must scale up efficiently.

These systems also need to work well with existing tools, so integration is smooth and seamless.

Monitoring performance and optimizing processes will be vital for keeping everything running quickly and effectively.

Infrastructure Requirements and Scaling

What infrastructure does AI video processing need in 2025? To handle vast amounts of data, systems need dependable video hosting and efficient video distribution platforms. Key components include:

- High-Performance Computing (HPC): Ensures fast processing of large video files.

- Scalable Storage Solutions: Stores massive datasets without slowing down the system.

- Edge Computing: Reduces latency by processing data closer to where it's collected.

Integrating these allows seamless scaling, ensuring smooth user experiences as demand grows. Multi-cloud strategies enhance flexibility, letting developers switch between different cloud providers based on need. This setup supports continuous development and updates, keeping the platform responsive and up-to-date.

Integration with Existing Systems

Although video processing systems are becoming more advanced, integrating AI video processing with existing systems in 2025 will be crucial for enhancing product capabilities. Developers are focusing on a seamless video workflow that harnesses AI to improve video conferencing services. This integration can be achieved through various strategies that guarantee compatibility and efficiency.

Performance Monitoring and Optimization

To guarantee that AI video processing systems run smoothly in 2025, developers must focus on performance monitoring and optimization. Key areas include:

- Efficient Resource Allocation: Ensuring that video collaboration tools use only the necessary computational capacity, especially for tasks like meeting analytics.

- Real-Time Monitoring: Continuous tracking of system performance to quickly identify and address any issues.

- Adaptive Optimization: Automatically adjusting settings based on current usage patterns to enhance user experience.

These strategies help developers create more reliable and user-friendly AI video processing systems.
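Real-time monitoring and adaptive optimization can be combined in a small feedback loop. The sketch below is one possible shape for it, not a reference design. The class name, the window size, the 25% step, and the thresholds are all hypothetical choices.

```python
from collections import deque
from statistics import mean


class LatencyMonitor:
    """Tracks recent per-frame latencies and suggests a processing scale."""

    def __init__(self, target_ms: float, window: int = 30):
        self.target_ms = target_ms
        self.samples = deque(maxlen=window)
        self.scale = 1.0  # 1.0 = full resolution

    def record(self, latency_ms: float) -> float:
        """Add a sample and return the (possibly updated) resolution scale."""
        self.samples.append(latency_ms)
        if len(self.samples) < self.samples.maxlen:
            return self.scale  # not enough data to judge yet
        avg = mean(self.samples)
        if avg > self.target_ms:
            # Over budget: step resolution down, but never below 25%.
            self.scale = max(0.25, self.scale - 0.25)
            self.samples.clear()
        elif avg < 0.5 * self.target_ms:
            # Plenty of headroom: step back up toward full resolution.
            self.scale = min(1.0, self.scale + 0.25)
            self.samples.clear()
        return self.scale
```

A caller would pass each frame's measured processing time to `record` and resize incoming frames by the returned scale. Waiting for a full window before adjusting keeps a single slow frame from triggering a quality drop.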

Cost Considerations and ROI

AI video processing projects in 2025 will need to consider budget planning for different scales, with small teams requiring fewer resources than enterprise solutions.

Resource allocation guidelines suggest that hardware costs, software licenses, and personnel expenses will make up the bulk of expenditures.

The expected return on investment (ROI) is influenced by factors such as improved user engagement, enhanced video quality, and the creation of new revenue streams through personalized content.

Budget Planning for Different Scales

Implementing AI video processing can range from small-scale projects to large, intricate systems, and the budget can vary greatly depending on the scope. For a basic setup, like enhancing video quality in a remote work environment, you might see costs starting around $10,000.

Medium-scale projects, such as adding real-time transcription to enterprise video conferencing, can range from $50,000 to $200,000.

Large-scale, custom systems that include advanced features like object detection or predictive analytics can surpass $1 million.

Factors influencing cost include:

- Data Volume: More data means more processing capacity and storage, which drives up the expense.

- Model Complexity: Simple algorithms cost less, while intricate models that require extensive training and tuning can be pricier.

- Integration Needs: Standalone solutions are cheaper, but integrating AI into existing systems can add considerable expenditure.

Different scales offer various capabilities, catering to diverse end-user needs.

Resource Allocation Guidelines

When considering resource distribution for AI video processing projects in 2025, it's vital to understand the various components that contribute to cost and how they can impact the return on investment (ROI).

Effective team collaboration is pivotal for optimizing resource allocation.

Hardware, software licenses, cloud services, and data storage are major cost factors.

Improved algorithms and better team coordination can reduce processing time, lowering costs.

Enhanced AI models can automate tasks, requiring less manual input and increasing efficiency.

Companies focusing on strategic resource allocation see improved product performance for end users.

Expected Return on Investment

Maximizing return on investment (ROI) for AI video processing projects in 2025 involves careful planning and strategic implementation. Companies are investing in AI-driven meeting recording and video content management systems to streamline their workflows.

Key areas of focus include:

1. Enhanced Efficiency: Automating video processing tasks reduces manual effort, freeing up time for other essential activities. For instance, AI can transcribe meetings, tag video content, and automatically generate summaries.

2. Improved User Experience: Advanced AI algorithms can analyze user behaviors to recommend relevant video content, enhancing the end-user experience. Personalization features can notably boost engagement.

3. Advanced Analytics: Utilizing AI to analyze video content can provide actionable insights. For example, sentiment analysis during meetings can help businesses understand employee morale better.
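ROI itself is simple arithmetic: the net benefit divided by the cost. The sketch below works through one example. The $50,000 cost is just a round number within the project ranges discussed earlier, and the $3,000 monthly saving is an invented figure used only to show the calculation.

```python
def roi(total_benefit: float, total_cost: float) -> float:
    """Return on investment as a fraction: (benefit - cost) / cost."""
    return (total_benefit - total_cost) / total_cost


def payback_months(upfront_cost: float, monthly_benefit: float) -> float:
    """Months until cumulative benefit covers the upfront cost."""
    return upfront_cost / monthly_benefit


# Assumption: a $50,000 project that saves $3,000 per month (illustrative only).
print(f"First-year ROI: {roi(3_000 * 12, 50_000):.0%}")
print(f"Payback: {payback_months(50_000, 3_000):.1f} months")
```

With these numbers the first-year ROI is negative (-28%) and payback takes about 16.7 months, which shows why the evaluation period matters as much as the headline savings.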


Frequently Asked Questions

Will AI Replace Human Video Editors?

While AI can automate many video editing tasks, it is unlikely to fully replace human video editors. Creativity, complex decision-making, and client interaction remain areas where humans excel. Instead, AI will likely augment and assist video editors, improving efficiency and enabling new possibilities. For simple, repetitive tasks, however, AI can perform well on its own.

What Are the Ethical Implications of AI in Video Processing?

The ethical considerations of AI in video processing include potential job displacement for human editors, deepfake generation leading to misinformation, and privacy concerns regarding automated surveillance and facial recognition. Moreover, biases in AI algorithms can result in unfair or discriminatory outcomes in content moderation and other applications. Unaddressed, these issues could lead to substantial societal harms, underscoring the need for responsible development and regulation. Balancing innovation with ethical considerations will be vital as AI continues to advance in this field.

How Will AI Video Processing Impact Privacy?

The integration of AI in video processing can greatly impact privacy, as it enables more efficient and extensive data collection, recognition, and tracking. Without solid regulations, it may lead to increased surveillance, potential misuse of personal data, and invasion of privacy. Ensuring transparency and consent in data usage is vital.

Can AI Generate Entirely New Video Content?

The generation of entirely new video content by AI is indeed possible. Advances in deep learning, such as Generative Adversarial Networks (GANs), enable AI to create synthetic yet realistic videos. These technologies can produce new scenes, replicate styles, and even generate convincing human faces and actions. However, ethical considerations and potential misuse, like deepfakes, should be carefully managed.

What Job Opportunities Will AI Video Processing Create?

AI video processing has the potential to create various job opportunities. Positions such as AI video analysts, who interpret and tag video data, and machine learning engineers specializing in video algorithms are expected to be in demand. Roles like AI-assisted video editors and content creators who use AI tools for enhanced production will likely emerge as well. The need for ethical AI specialists to address privacy concerns in video processing will also rise, and educators and trainers who can upskill workers in these new tools and technologies will be essential. Finally, jobs in infrastructure management will be created to support the increased data processing requirements, particularly in areas like network optimization and secure protocols such as SSH Websocket for handling sensitive video data.

To Sum Up

By 2025, AI video processing will be everywhere, making videos look better and load faster. It already powers features such as face filters in apps like TikTok and Instagram. Upcoming systems will understand what is happening in a video rather than just identifying faces, which means better content for users: sharper video and more personalized recommendations. New tools will also make it easier for developers to add these features to their apps.

References

Andrade, E., Ndachena, A., Macz, B., et al. (2023). Custom ASICs for Data Center Video Processing: Advancements in AI-ML Integrated, High-Performance VPUs for Hyper-Scaled Platforms. SPIE Proceedings. https://doi.org/10.1117/12.2685872

Khan, K. (2023). Adaptive Video Streaming: Navigating Challenges, Embracing Personalization, and Charting Future Frontiers. International Transactions on Electrical Engineering and Computing, 2(4), 172-182. https://doi.org/10.62760/iteecs.2.4.2023.63

Zhou, P., Wang, L., Liu, Z., et al. (2024). A Survey on Generative AI and LLM for Video Generation, Understanding, and Streaming. TechRxiv Preprint. https://doi.org/10.36227/techrxiv.171172801.19993069/v1
