If you’re building an app where users talk, listen, or collaborate live, the OpenAI Realtime API can change the game. It lets your product process and respond to audio instantly, like having a real-time AI brain plugged into every conversation.

For startups and SaaS platforms, that means faster response times, smarter interactions, and more engaging user experiences. Imagine turning a basic video call into one where AI transcribes notes, suggests answers, or even joins the chat with natural voice replies.

As a top software company with two decades in video and voice streaming and deep experience with WebRTC since its early days, we’ve seen firsthand how real-time AI can take a simple call or chat and turn it into a dynamic, human-like exchange.

Let’s explore the basic implementation for WebRTC, SIP, and WebSocket in your app.

⚙️Here’s more about our WebRTC Development Services

Key Takeaways

The OpenAI Realtime API makes voice AI dead simple across WebRTC for browser apps, SIP for phone systems, and WebSockets for flexible integrations. Grab the code examples below to spin up working prototypes in hours, not weeks.

With WebRTC hitting 8 billion devices by the end of 2025 and the market growing at a CAGR of almost 62.6%, adding real-time AI now gives your SaaS or startup a serious edge in user engagement and retention.

For high-volume apps, go with gpt-realtime-mini at about $0.16/min; for advanced reasoning, pick gpt-realtime at about $0.18/min.

Understanding How the OpenAI Realtime API Works

The Realtime API is built for live interaction. It streams audio and text back and forth using WebSockets or WebRTC, powering use cases like real-time transcription, AI assistants, and automated call handling. You connect to a session, send user audio in real time, and get instant speech or text responses from models like gpt-realtime and gpt-realtime-mini.

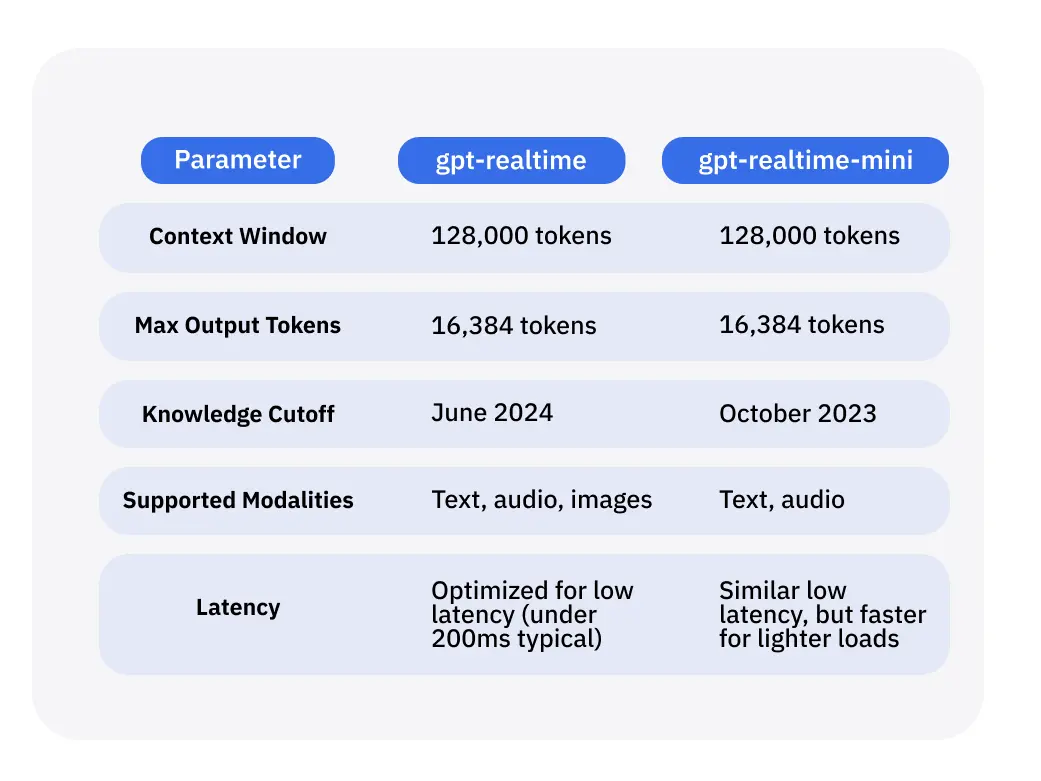

To get started, you’ll need an OpenAI API key. Here’s the official guide to getting an API key. The API supports models like gpt-realtime and gpt-realtime-mini, with the full model offering stronger reasoning and natural speech for complex tasks, while the mini version provides a cost-efficient alternative for simpler applications.

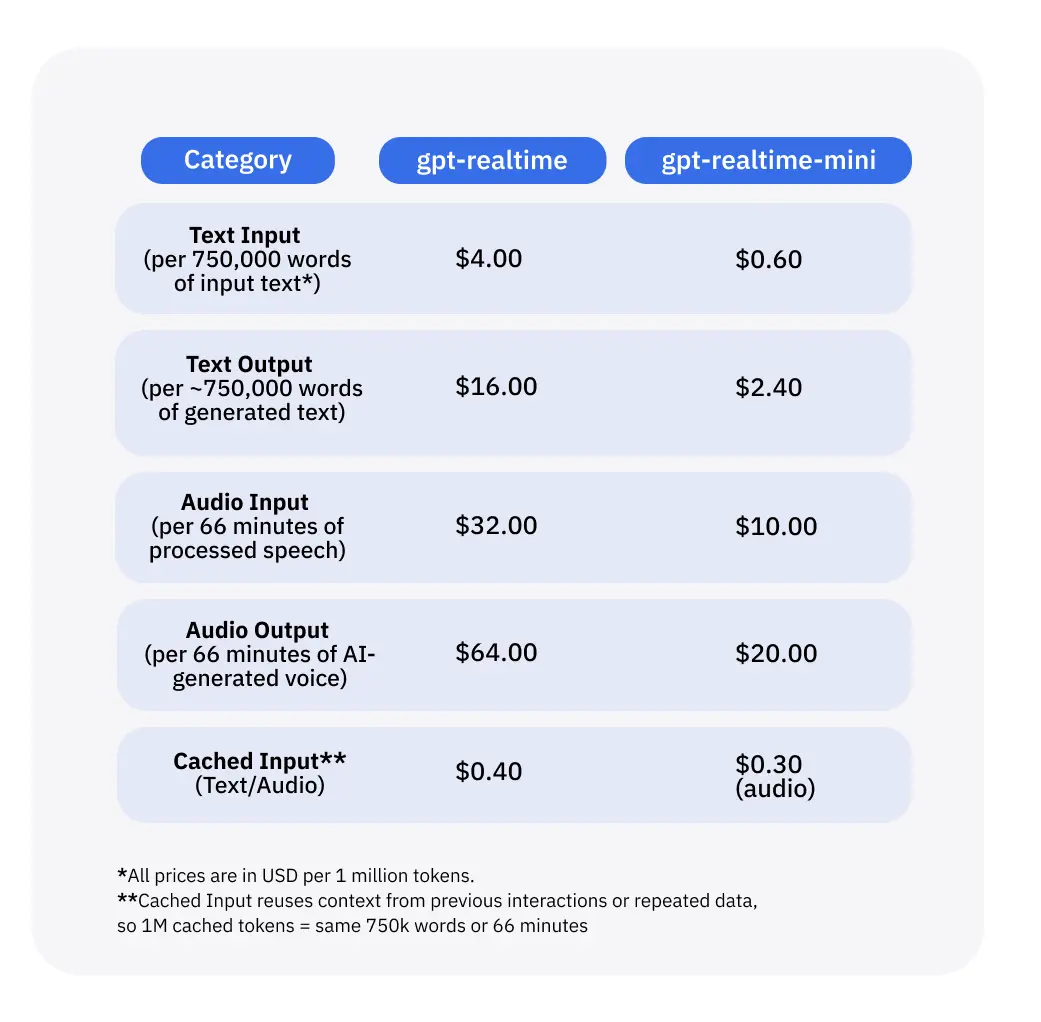

Here's how their pricing compares. Billing is token-based, and audio tokens are priced at different rates than text tokens:

For pricing, gpt-realtime costs $4.00 per 1M text input tokens, $16.00 per 1M text output tokens, with audio at $32 per 1M input tokens and $64 per 1M output tokens. Cached inputs are cheaper at $0.40 per 1M for text or audio.

The mini model is more affordable at $0.60 per 1M text input tokens, $2.40 per 1M text output tokens, audio input at $10 per 1M tokens, and audio output at $20 per 1M tokens, with cached audio input at $0.30 per 1M.

Quick Insights: The mini model runs at ~$0.16 per minute for basic conversations (no system prompts), vs. $0.18 for gpt-realtime. Pick gpt-realtime for advanced reasoning, mini for high-volume efficiency.
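To make the token rates concrete, here is a minimal sketch of a cost estimator. The rates are copied from the paragraphs above; the `estimateCostUsd` helper, the field names, and the sample token counts are our own illustrative assumptions, and cached-input discounts are left out for brevity.

```javascript
// Per-1M-token rates in USD, taken from the pricing paragraphs above.
const RATES = {
  'gpt-realtime': { textIn: 4.0, textOut: 16.0, audioIn: 32.0, audioOut: 64.0 },
  'gpt-realtime-mini': { textIn: 0.6, textOut: 2.4, audioIn: 10.0, audioOut: 20.0 },
};

// `usage` holds token counts per category; missing categories count as zero.
function estimateCostUsd(model, usage) {
  const rate = RATES[model];
  if (!rate) throw new Error(`Unknown model: ${model}`);
  let total = 0;
  for (const key of Object.keys(rate)) {
    total += ((usage[key] || 0) / 1_000_000) * rate[key];
  }
  return total;
}

// Hypothetical session: 10,000 audio tokens in, 5,000 audio tokens out.
const sampleUsage = { audioIn: 10_000, audioOut: 5_000 };
console.log(estimateCostUsd('gpt-realtime', sampleUsage).toFixed(2));      // 0.64
console.log(estimateCostUsd('gpt-realtime-mini', sampleUsage).toFixed(2)); // 0.20
```

Feeding in the token counts from your own usage logs should give a more realistic per-minute figure than any rule of thumb.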

Using WebRTC for Browser-Based AI Voice

If you’re targeting browser users, WebRTC is your best friend. It’s built into all major browsers and supports peer-to-peer video and audio without plugins. Pairing it with OpenAI’s Realtime API gives you a voice interface that feels as natural as human conversation.

Beyond simple chats, this combo shines in apps like virtual meetings or live coaching, where AI can analyze tone or give feedback in real time.

The setup is straightforward: capture the user’s microphone input with getUserMedia(), stream it through WebRTC to your backend, and forward that stream to OpenAI using a secure WebSocket. The API returns AI-generated audio almost instantly, which you play on the client. Handle echo cancellation through WebRTC and test on different devices for stability.

This setup can reach sub-200 ms latency – fast enough for natural-feeling dialogue. With WebRTC projected to power over 8 billion devices by the end of 2025, scalability is a given.

Client-Side Example

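    // Simplified transport: this sketch sends recorded chunks over a WebSocket to your backend.
    // The URL is a placeholder for your own server.
    const ws = new WebSocket('wss://your-server.example/audio');

    navigator.mediaDevices.getUserMedia({ audio: true })
      .then(stream => {
        // WebM is a container format; the backend must decode it to PCM16
        // before forwarding the audio to OpenAI
        const mediaRecorder = new MediaRecorder(stream, { mimeType: 'audio/webm' });
        mediaRecorder.ondataavailable = event => {
          // Send binary data to your backend WebSocket once it is open
          if (event.data.size > 0 && ws.readyState === WebSocket.OPEN) {
            ws.send(event.data);
          }
        };
        mediaRecorder.start(100); // Emit an audio chunk every 100 ms
      })
      .catch(err => console.error('Could not start microphone capture:', err));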

Server-Side Example (Node.js)

    import WebSocket from 'ws';

    // The OpenAI-Beta header selects the beta event interface used below.
    // Event and field names can differ in newer API versions, so check the current reference.
    const ws = new WebSocket(
      'wss://api.openai.com/v1/realtime?model=gpt-realtime',
      {
        headers: {
          Authorization: `Bearer ${process.env.OPENAI_API_KEY}`,
          'OpenAI-Beta': 'realtime=v1'
        }
      }
    );

    ws.on('open', () => {
      // Configure the session: server-side voice activity detection, text and audio output
      ws.send(JSON.stringify({
        type: 'session.update',
        session: {
          model: 'gpt-realtime',
          turn_detection: { type: 'server_vad' },
          modalities: ['text', 'audio']
        }
      }));
    });

    ws.on('message', message => {
      const data = JSON.parse(message.toString());
      if (data.type === 'response.audio.delta') {
        // data.delta is a base64-encoded audio chunk; decode it and relay it to the client
        console.log('AI audio chunk (base64 length):', data.delta.length);
      }
      if (data.type === 'response.text.delta') {
        // Incremental text output from the model
        console.log('AI text:', data.delta);
      }
      if (data.type === 'input_audio_buffer.speech_stopped') {
        // Server VAD detected the end of user speech; the AI will respond
        console.log('User done speaking');
      }
    });

This prototype gets you live audio streaming in minutes.
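The server example leaves the relay step as a comment. As a rough sketch of that step, the helper below turns one `response.audio.delta` event into PCM16 samples you could forward to the client. It assumes the session uses the PCM16 output format (little-endian, mono); `decodeAudioDelta` is our own hypothetical name, not part of any SDK.

```javascript
// Convert one `response.audio.delta` event into 16-bit PCM samples.
// Assumes the delta is base64-encoded little-endian PCM16.
function decodeAudioDelta(event) {
  const bytes = Buffer.from(event.delta, 'base64');
  const samples = new Int16Array(Math.floor(bytes.length / 2));
  for (let i = 0; i < samples.length; i++) {
    // Read explicitly as little-endian so alignment and host byte order don't matter.
    samples[i] = bytes.readInt16LE(i * 2);
  }
  return samples;
}
```

Inside the `message` handler you would call `decodeAudioDelta(data)` and hand the samples to whatever transport carries audio back to the browser.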

Connecting OpenAI Realtime API with SIP

For telephony-based products, SIP (Session Initiation Protocol) is still the backbone of voice infrastructure. It manages call setup and routing between devices. The OpenAI Realtime API can sit between your SIP gateway and your app to inject AI intelligence into phone calls.

It’s ideal for call centers or IVR systems where AI can answer routine questions, escalate calls, or even translate conversations in real time. You can bridge SIP to WebSockets with a provider like Twilio or a PBX such as Asterisk. The audio (an RTP stream) passes through your SIP trunk, and your server forwards it to OpenAI for processing.

Make sure to convert RTP packets into a compatible format, 16-bit PCM, 24 kHz mono, before streaming.
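Here is a minimal sketch of that conversion, assuming your trunk delivers G.711 μ-law at 8 kHz (a common telephony codec) and that you have already stripped the RTP headers. The function names are ours, and the linear interpolation is a deliberately simple stand-in for a proper resampling filter. The API also lists G.711 among its audio formats, so depending on your session settings you may be able to skip this step.

```javascript
// Decode one G.711 mu-law byte into a 16-bit linear PCM sample.
function muLawToPcm16(byte) {
  const u = ~byte & 0xff; // mu-law bytes are stored bit-inverted
  const exponent = (u >> 4) & 0x07;
  const mantissa = u & 0x0f;
  const magnitude = (((mantissa << 3) + 0x84) << exponent) - 0x84;
  return (u & 0x80) ? -magnitude : magnitude;
}

// Decode 8 kHz mu-law payload bytes and upsample 3x to 24 kHz PCM16
// using linear interpolation between neighbouring samples.
function muLaw8kToPcm24k(muLawBytes) {
  const out = new Int16Array(muLawBytes.length * 3);
  for (let i = 0; i < muLawBytes.length; i++) {
    const current = muLawToPcm16(muLawBytes[i]);
    const next = i + 1 < muLawBytes.length ? muLawToPcm16(muLawBytes[i + 1]) : current;
    for (let k = 0; k < 3; k++) {
      out[i * 3 + k] = Math.round(current + ((next - current) * k) / 3);
    }
  }
  return out;
}
```

Each 20 ms RTP payload (160 μ-law bytes) becomes 480 PCM16 samples at 24 kHz.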

Here’s a simplified Python bridge using Twilio for SIP and websockets for OpenAI:

    import asyncio, json, os, websockets
    from twilio.rest import Client

    # Placeholder credentials and endpoints; replace them with your own values
    client = Client('account_sid', 'auth_token')
    call = client.calls.create(
        to='sip:your-endpoint',
        from_='your-number',
        url='https://your-server.com/audio-webhook'
    )

    async def connect_openai():
        uri = 'wss://api.openai.com/v1/realtime?model=gpt-realtime'
        # Recent websockets releases name this argument additional_headers;
        # older releases call it extra_headers
        async with websockets.connect(
            uri,
            additional_headers={
                'Authorization': f'Bearer {os.getenv("OPENAI_API_KEY")}',
                'OpenAI-Beta': 'realtime=v1'
            }
        ) as ws:
            # session.update is the client event that configures the session
            await ws.send(json.dumps({
                'type': 'session.update',
                'session': {'modalities': ['text', 'audio']}
            }))
            # Stream audio data here (e.g. from RTP packets)
            async for message in ws:
                data = json.loads(message)
                print(data)

    asyncio.run(connect_openai())

This setup lets you build AI-powered phone agents that answer or assist human operators live. We’ve implemented similar systems for SaaS clients to automate repetitive calls and reduce handling times.

WebSockets for App-Level Integration

WebSockets are ideal if you don’t need full peer-to-peer communication. They provide a persistent, bidirectional connection with the API – perfect for mobile or desktop apps that need flexible control.

You can stream audio, text, or JSON messages back and forth. It’s a good fit for chat tools, voice-enabled dashboards, or productivity apps.

Here’s a simple JavaScript example:

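    // Browser WebSockets cannot set custom headers, so credentials travel in the subprotocol list.
    // EPHEMERAL_KEY is assumed to be a short-lived token minted by your backend;
    // never ship a long-lived API key to the client. Verify this pattern against the current docs.
    const ws = new WebSocket(
      'wss://api.openai.com/v1/realtime?model=gpt-realtime',
      ['realtime', `openai-insecure-api-key.${EPHEMERAL_KEY}`, 'openai-beta.realtime-v1']
    );

    // Capture and play at 24 kHz so samples match the PCM16 rate the API expects
    const audioCtx = new AudioContext({ sampleRate: 24000 });
    let playTime = 0; // When the next AI audio chunk should start playing

    ws.onopen = () => {
      ws.send(JSON.stringify({
        type: 'session.update',
        session: { modalities: ['text', 'audio'] }
      }));
    };

    navigator.mediaDevices.getUserMedia({ audio: true }).then(stream => {
      audioCtx.resume(); // Browsers may start the context suspended
      const source = audioCtx.createMediaStreamSource(stream);
      // ScriptProcessorNode is deprecated; AudioWorklet is the long-term replacement
      const processor = audioCtx.createScriptProcessor(4096, 1, 1);
      processor.onaudioprocess = e => {
        if (ws.readyState !== WebSocket.OPEN) return;
        const audioData = e.inputBuffer.getChannelData(0);
        // Convert Float32 samples in [-1, 1] to 16-bit PCM
        const pcm16 = new Int16Array(audioData.length);
        for (let i = 0; i < audioData.length; i++)
          pcm16[i] = Math.max(-1, Math.min(1, audioData[i])) * 0x7fff;
        const base64Chunk = btoa(String.fromCharCode(...new Uint8Array(pcm16.buffer)));
        ws.send(JSON.stringify({ type: 'input_audio_buffer.append', audio: base64Chunk }));
      };
      source.connect(processor);
      processor.connect(audioCtx.destination);
    });

    ws.onmessage = event => {
      const data = JSON.parse(event.data);
      if (data.type === 'response.audio.delta') {
        // The delta is base64 PCM16 with no WAV header, so decode it and schedule it manually
        const bytes = Uint8Array.from(atob(data.delta), c => c.charCodeAt(0));
        const pcm = new Int16Array(bytes.buffer);
        const buffer = audioCtx.createBuffer(1, pcm.length, 24000);
        const channel = buffer.getChannelData(0);
        for (let i = 0; i < pcm.length; i++) channel[i] = pcm[i] / 0x8000;
        const node = audioCtx.createBufferSource();
        node.buffer = buffer;
        node.connect(audioCtx.destination);
        // Queue chunks back to back so playback stays gapless
        playTime = Math.max(playTime, audioCtx.currentTime);
        node.start(playTime);
        playTime += buffer.duration;
      }
    };

This creates a direct, low-latency AI voice interaction in any app environment.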

Practical Tips and Optimization

Real-time systems leave no room for lag. Keep audio chunks small, about 20 ms, and use token-based authentication for every session. Test different sample rates to balance speed and fidelity (OpenAI recommends 24 kHz).
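As a quick illustration of the chunk-size tip, the sketch below splits a PCM16 buffer into 20 ms frames and wraps each one in an `input_audio_buffer.append` event. It assumes 24 kHz mono audio on a little-endian host; `toAppendEvents` is a hypothetical helper name of ours.

```javascript
const SAMPLE_RATE = 24000; // Hz, the rate mentioned above
const FRAME_MS = 20;       // Target chunk duration
const SAMPLES_PER_FRAME = (SAMPLE_RATE * FRAME_MS) / 1000; // 480 samples = 960 bytes

// Split an Int16Array of PCM16 samples into 20 ms append events.
// The final frame may be shorter than 20 ms.
function toAppendEvents(pcm16) {
  const events = [];
  for (let start = 0; start < pcm16.length; start += SAMPLES_PER_FRAME) {
    const frame = pcm16.subarray(start, start + SAMPLES_PER_FRAME);
    // View the frame's bytes without copying, then base64-encode them.
    const bytes = Buffer.from(frame.buffer, frame.byteOffset, frame.byteLength);
    events.push({ type: 'input_audio_buffer.append', audio: bytes.toString('base64') });
  }
  return events;
}
```

Each returned object can be passed through `JSON.stringify` and sent over the open WebSocket as it is produced.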

For debugging, Chrome’s WebRTC Internals and Wireshark are invaluable. From our experience, building a lightweight prototype first cuts development time by about 50%.

Adding AI-driven features like sentiment detection or real-time translation boosts accessibility and service quality. The global WebRTC market hit $10.89 billion in 2025 and continues to grow at 37.28% CAGR, making this the right time to innovate.

FAQ

What models does the OpenAI Realtime API use?

Mainly gpt-realtime and gpt-realtime-mini, optimized for voice input and low-latency streaming output.

Can it handle function calls or structured responses?

Yes. Include a tool definition in your session and parse the response.done event for results.
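A minimal sketch of that parsing step might look like the following. It assumes function calls arrive as `function_call` items in the `response.done` output and that results go back as `function_call_output` items followed by a `response.create`; the `get_weather` tool and the `handleResponseDone` helper are purely hypothetical.

```javascript
// Hypothetical local tools, keyed by the function name declared in the session.
const tools = {
  get_weather: ({ city }) => ({ city, forecast: 'sunny' }),
};

// Turn a `response.done` event into the client events that answer its function calls.
function handleResponseDone(event, toolMap) {
  const replies = [];
  const items = (event.response && event.response.output) || [];
  for (const item of items) {
    if (item.type !== 'function_call') continue;
    const fn = toolMap[item.name];
    const result = fn
      ? fn(JSON.parse(item.arguments || '{}')) // Arguments arrive as a JSON string
      : { error: `Unknown tool: ${item.name}` };
    replies.push({
      type: 'conversation.item.create',
      item: { type: 'function_call_output', call_id: item.call_id, output: JSON.stringify(result) },
    });
  }
  // Ask the model to continue once every tool result has been queued.
  if (replies.length > 0) replies.push({ type: 'response.create' });
  return replies;
}
```

Send each returned event over the socket in order; an empty array means the response contained no function calls.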

Is it expensive?

Depends on usage: gpt-realtime runs ~$0.18/min for basic chats vs. $0.16/min for gpt-realtime-mini. Check full pricing here

Can I use it for group calls?

Not natively. Run one session per participant or mix multiple streams on your server.
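If you go the mixing route, a simple approach is to sum the participants' samples and clamp the result. The sketch below assumes every stream is PCM16 at the same sample rate and already time-aligned; `mixPcm16` is our own illustrative helper, and a production mixer would likely add gain control as well.

```javascript
// Mix equal-rate PCM16 streams by summing samples and clamping to the 16-bit range.
function mixPcm16(streams) {
  const length = Math.max(0, ...streams.map(s => s.length));
  const mixed = new Int16Array(length);
  for (let i = 0; i < length; i++) {
    let sum = 0;
    // Shorter streams contribute silence past their end.
    for (const s of streams) sum += i < s.length ? s[i] : 0;
    mixed[i] = Math.max(-32768, Math.min(32767, sum));
  }
  return mixed;
}
```

The mixed buffer can then be sent to a single Realtime session as if it came from one speaker.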

What if latency spikes?

Use regional edge servers, small audio chunks, and persistent WebSocket connections to minimize delay.

Conclusion

Real-time voice AI isn’t just a shiny add-on; it’s the next competitive edge for apps that want to feel alive. With the OpenAI Realtime API, your product can listen, understand, and respond instantly across WebRTC, SIP, or WebSockets. That means smoother calls, smarter assistants, and customer interactions that actually feel human.

We’ve helped startups and SaaS teams turn early prototypes into production-ready voice features that boost engagement and retention.

If you’re ready to build something that speaks your users’ language (literally), let’s talk. Drop us a line or book a consultation today, and we’ll help you map the fastest path from idea to live, talking product.
