Let’s be real: voice tech can be frustrating. Slow customer support, laggy calls, and chatbots that sound like robots drive customers away and cost you money.

But what if your AI could actually chat like a human: smoothly, in real time, without the awkward pauses?

Enter LiveKit Agents. It’s an open-source toolkit built on WebRTC that lets you create voice AI agents that feel natural. They handle conversations smoothly, scale easily, and can even connect to regular phone lines. The result? Better customer talks and lower costs. Simple as that.

And the timing couldn’t be better. Voice AI is exploding. By 2034, the market’s set to hit $47.5 billion. Most businesses (80% by the end of 2026) plan to use AI in customer service. Billions of voice assistants will be in use soon.

LiveKit fits right into this shift. It helps startups and SaaS teams build agents that deliver real results, like faster replies and happier users. Let’s break down how it works and whether it’s right for you.

Key Takeaways

- LiveKit solves real voice headaches (delay, cost, and complexity) with agents that chat naturally.

- Big perks: Speed, flexibility (choose your providers), and hosting you control.

- Watch out for: Interruptions, API costs, and privacy setup. Plan ahead.

- It’s ready now: Powers big platforms and billions of calls.

- Adding voice AI can seriously level up your product, making it more engaging, helpful, and sticky.

What LiveKit + WebRTC Actually Do for Voice AI

LiveKit is an open-source platform that uses WebRTC, the tech behind smooth video chats in your browser, to power real-time voice and video. WebRTC lets browsers and apps exchange audio and video in real time, no plugins needed. LiveKit’s twist? It lets AI join conversations just like a person would, in virtual rooms where audio is processed live.

Why does this matter for your business?

Think customer support that answers instantly, telehealth appointments with natural back-and-forth, or sales calls where AI helps qualify leads without sounding scripted.

LiveKit started as a WebRTC tool and grew into a full AI framework. It now handles billions of calls a year. For founders and devs, it’s a way to add voice AI without starting from zero.

✅ Pros: Open-source means you can tweak everything.

⚠️ Cons: You’ll need some tech know-how to set it up, but once it’s live, scaling is straightforward.

Big names already use it. OpenAI runs ChatGPT’s Advanced Voice on LiveKit, serving millions of chats daily. That’s proof it can handle serious traffic.

Bottom line: LiveKit makes real-time voice AI way simpler, turning clunky systems into smooth conversations.

How LiveKit’s Voice Agents Actually Work

Here’s the flow:

Your AI agent joins a WebRTC room (just like a human user), grabs the audio stream, turns speech into text, thinks of a reply using a language model, and speaks back. All in real time.

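The secret sauce? WebRTC typically sends audio over UDP, and LiveKit routes it through edge servers close to your users, which keeps delay under 1 second globally. That beats pushing audio over WebSockets, where TCP’s in-order delivery lets one lost packet hold up everything behind it. This means conversations feel natural, not stilted.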
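One reason real-time media transport tends to beat a WebSocket stream for live audio is head-of-line blocking. The toy model below is only a sketch of that idea: the function name, the 20 ms packet interval, the 50 ms one-way delay, and the 200 ms retransmit penalty are all made-up assumptions, not measurements of LiveKit or any real network.

```python
def delivery_latencies(num_packets, lost, ordered,
                       interval_ms=20, one_way_ms=50, retransmit_ms=200):
    """Per-packet latency (ms from send to app delivery) in a toy network.

    ordered=True  models a reliable in-order stream (TCP-like): a lost packet
                  is retransmitted and every packet behind it has to wait.
    ordered=False models real-time media (UDP-like): a lost packet is simply
                  skipped (reported as None) and later packets are unaffected.
    """
    latencies = []
    previous_delivery = 0
    for seq in range(num_packets):
        sent = seq * interval_ms
        arrival = sent + one_way_ms
        if seq in lost:
            if not ordered:
                latencies.append(None)  # dropped, never delivered
                continue
            arrival += retransmit_ms    # wait for the retransmission
        if ordered:
            # In-order delivery: cannot hand over a packet before its predecessor.
            arrival = max(arrival, previous_delivery)
            previous_delivery = arrival
        latencies.append(arrival - sent)
    return latencies


# Same network, same single lost packet, two delivery models.
stream = delivery_latencies(6, lost={1}, ordered=True)
media = delivery_latencies(6, lost={1}, ordered=False)
print("in-order stream:", stream)
print("real-time media:", media)
```

In this model the in-order stream delays every packet after the lost one, while the media-style transport loses 20 ms of audio and carries on at its normal latency.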

📚Read our guide to QUIC and MoQ – next-gen protocol for real-time communication

Under the hood, features like voice activity detection (VAD) help the AI know when to talk and when to listen. New models detect speech in under 25ms across 13 languages.
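To make the idea of VAD concrete, here is a toy energy-threshold detector. It is not the model LiveKit ships (production VADs are trained models, not simple thresholds), and the frame size, threshold, and function names are assumptions picked for illustration.

```python
import math


def frame_rms(samples):
    """Root-mean-square energy of one frame of float samples in [-1, 1]."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def detect_speech(samples, frame_size=160, threshold=0.05):
    """Return one boolean per full frame: True where energy exceeds threshold.

    frame_size=160 is 10 ms of audio at 16 kHz; both defaults are illustrative.
    """
    flags = []
    for start in range(0, len(samples) - frame_size + 1, frame_size):
        frame = samples[start:start + frame_size]
        flags.append(frame_rms(frame) > threshold)
    return flags


# Synthetic input: 20 ms of silence followed by 20 ms of a 440 Hz tone.
silence = [0.0] * 320
tone = [0.5 * math.sin(2 * math.pi * 440 * n / 16000) for n in range(320)]
flags = detect_speech(silence + tone)
print(flags)
```

A real agent would feed a signal like these flags into its turn-taking logic: keep listening while the user is speaking, and reply once they stop.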

You can plug in your favorite tools:

- Deepgram or AssemblyAI for speech-to-text

- OpenAI or Anthropic for the brain (LLM)

- Cartesia or ElevenLabs for the voice (TTS)

Mix and match to build a pipeline that fits your needs and budget.
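Here is a rough sketch of what "mix and match" means in code terms. It deliberately avoids the LiveKit API: VoicePipeline and the fake_* functions are hypothetical stand-ins, where real providers would call Deepgram, OpenAI, Cartesia, and so on.

```python
from dataclasses import dataclass, replace
from typing import Callable


@dataclass
class VoicePipeline:
    """One conversational turn: audio in -> transcript -> reply -> audio out."""
    stt: Callable[[bytes], str]
    llm: Callable[[str], str]
    tts: Callable[[str], bytes]

    def respond(self, audio_in: bytes) -> bytes:
        transcript = self.stt(audio_in)  # speech-to-text
        reply = self.llm(transcript)     # decide what to say
        return self.tts(reply)           # text-to-speech


# Stub providers (assumptions for the demo, not real services).
def fake_stt(audio: bytes) -> str:
    return audio.decode("utf-8")


def fake_llm(prompt: str) -> str:
    return f"You said: {prompt}"


def fake_tts(text: str) -> bytes:
    return text.encode("utf-8")


def shouting_tts(text: str) -> bytes:
    return text.upper().encode("utf-8")


pipeline = VoicePipeline(stt=fake_stt, llm=fake_llm, tts=fake_tts)
reply_audio = pipeline.respond(b"hello")

# Swapping one provider leaves the other two stages untouched.
loud_pipeline = replace(pipeline, tts=shouting_tts)
loud_audio = loud_pipeline.respond(b"hello")
print(reply_audio, loud_audio)
```

Because each stage only depends on the shape of its input and output, you can change one vendor for cost or quality reasons without rewriting the rest.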

✅ Pros: Swap providers to control costs and quality.

⚠️ Cons: Getting interruptions to feel natural takes a little tuning.

Performance? Think ~465ms response times (thanks AssemblyAI for the benchmark). That’s fast enough for real-time support or live meetings.
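If you want to see where your own response time goes, a simple latency budget helps. The sketch below is an assumption-heavy example: the stage names and millisecond values are placeholders (they are not a breakdown of the benchmark above), and only the 1-second ceiling comes from this article. Swap in numbers you measure yourself.

```python
def latency_report(stages_ms, budget_ms=1000):
    """Sum per-stage latencies and compare the total with a budget."""
    total = sum(stages_ms.values())
    slowest = max(stages_ms, key=stages_ms.get)
    return {
        "total_ms": total,
        "within_budget": total <= budget_ms,
        "headroom_ms": budget_ms - total,
        "slowest_stage": slowest,
    }


# Placeholder measurements for one conversational turn (hypothetical values).
example_stages = {
    "network": 60,
    "stt": 150,
    "llm_first_token": 300,
    "tts_first_audio": 120,
}
report = latency_report(example_stages)
print(report)
```

The slowest stage is usually the first place to look when you swap providers or tune settings.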

💡Curious how low latency could change your user experience? Let’s chat about your use case.

4 Types of Voice AI You Can Build with LiveKit

1. Conversational Assistants (using OpenAI Realtime)

These use OpenAI’s Realtime API for chat that feels almost human. They join rooms, listen, and reply instantly.

Great for: Support bots, friendly greeters, Q&A.

Setup tip: Use LiveKit’s OpenAI plugin; it’s basically plug-and-play.

✅ Super easy to get started.

⚠️ You’re relying on OpenAI’s uptime.

2. Custom Pipeline Agents (choose your own STT/LLM/TTS)

Prefer to mix and match? Build your own pipeline: pick a speech-to-text service, an LLM, and a voice model separately.

Great for: Specialized tasks, cost optimization, unique brand voices.

Example: A pizza ordering bot using Whisper (STT), GPT-4 (LLM), and a custom TTS.

✅ Total control at each step.

⚠️ Requires more setup and wiring.

3. Telephony Agents (for real phone calls)

Connect your AI to actual phone lines using SIP. Your agent can call customers or answer inbound calls.

Great for: Appointment reminders, customer support lines, lead follow-up.

Real use: Some teams even use this for high-stakes setups like emergency dispatch.

✅ Reach customers anywhere, phone or web.

⚠️ Needs some telecom config.

4. Multimodal Agents (video + voice)

Agents that can “see” video or screenshares while talking.

Great for: Interactive demos, meeting assistants, avatar-led experiences.

✅ Super engaging and interactive.

⚠️ Needs more bandwidth and computing power.

Why This Matters for Your Business

- Faster, smoother talks: Low latency means no awkward “are you there?” moments.

- No vendor lock-in: Open-source = you own the code. Self-host to save money, or use LiveKit Cloud to scale.

- Sounds human: Better voice quality keeps users engaged (think 30% faster support resolutions).

- Grows with you: Cloud scales automatically with 99.99% uptime.

- Works everywhere: Reach users on the web, in-app, or by plain old phone call.

The ROI? Deploy in minutes, cut support costs, and keep customers happier. E-commerce sites use it to guide shoppers. SaaS products add it for better onboarding.

Stats don’t lie: 88% of businesses using AI already see real benefits.

How to Build Your First Voice Agent (Step-by-Step)

1. Get access: Sign up at livekit.io (free for 1,000 minutes).

Tip: Start with Cloud to test fast.

2. Pick your approach:

- Use OpenAI Realtime for the simplest path.

- Build a custom pipeline if you want control.

3. Install stuff:

pip install "livekit-agents[openai,deepgram,cartesia]~=1.0"

4. Set keys securely: Add LIVEKIT_URL, LIVEKIT_API_KEY, and LIVEKIT_API_SECRET, plus keys for OpenAI/Deepgram/etc., to your environment. Don’t hardcode them!
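A quick startup check saves you from confusing errors later. This is a minimal sketch, assuming the variable names the LiveKit SDKs and the OpenAI, Deepgram, and Cartesia plugins conventionally read; the helper names are made up, and you should trim the list to the providers you actually use.

```python
import os

# Assumed variable names; adjust to match your providers.
REQUIRED_VARS = (
    "LIVEKIT_URL",
    "LIVEKIT_API_KEY",
    "LIVEKIT_API_SECRET",
    "OPENAI_API_KEY",
    "DEEPGRAM_API_KEY",
    "CARTESIA_API_KEY",
)


def missing_env_vars(env=None, required=REQUIRED_VARS):
    """Return the required variable names that are unset or empty."""
    env = os.environ if env is None else env
    return [name for name in required if not env.get(name)]


def require_env(env=None):
    """Raise a readable error if any required variable is missing."""
    missing = missing_env_vars(env)
    if missing:
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))


# Typical use: call require_env() once at the top of your agent script.
print(missing_env_vars({"LIVEKIT_URL": "wss://example.invalid"}))
```

Failing fast with a list of missing names is friendlier than a provider error halfway through the first call.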

5. Write your agent logic:

from livekit.agents import Agent

agent = Agent(instructions="You're a helpful support assistant.")

6. Connect to a room: Use AgentSession to wire up STT, LLM, TTS.

Tip: Test interruptions early.
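What does "handling an interruption" mean in practice? Roughly: stop talking the moment the user starts. The sketch below shows that pattern with plain asyncio. It is not LiveKit's implementation; speak, speak_with_barge_in, and the timings are hypothetical, and the "playback" just appends words to a list.

```python
import asyncio


async def speak(words, spoken, delay):
    """Pretend TTS playback: emit one word at a time."""
    for word in words:
        spoken.append(word)
        await asyncio.sleep(delay)


async def speak_with_barge_in(words, user_started_speaking, delay=0.02):
    """Play the reply, but stop as soon as the user starts talking."""
    spoken = []
    playback = asyncio.create_task(speak(words, spoken, delay))
    interrupt = asyncio.create_task(user_started_speaking.wait())
    await asyncio.wait({playback, interrupt},
                       return_when=asyncio.FIRST_COMPLETED)
    interrupted = not playback.done()
    # Cancel whichever task is still running and wait for cleanup.
    for task in (playback, interrupt):
        task.cancel()
    await asyncio.gather(playback, interrupt, return_exceptions=True)
    return spoken, interrupted


async def demo():
    user_started_speaking = asyncio.Event()
    reply = "Your order has shipped and should arrive on Friday".split()
    # Simulate the user cutting in shortly after playback begins.
    asyncio.get_running_loop().call_later(0.05, user_started_speaking.set)
    return await speak_with_barge_in(reply, user_started_speaking)


spoken, interrupted = asyncio.run(demo())
print(interrupted, spoken)
```

When you test a real agent, try cutting in at different points in a reply and check that it stops promptly and does not talk over you.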

7. Add a frontend (optional): Use LiveKit’s SDKs for web or mobile.

8. Test & deploy: Run locally with python my_agent.py dev, then deploy to Cloud when ready.

💡 Need a tailored roadmap for building your voice AI agent? Reach out!

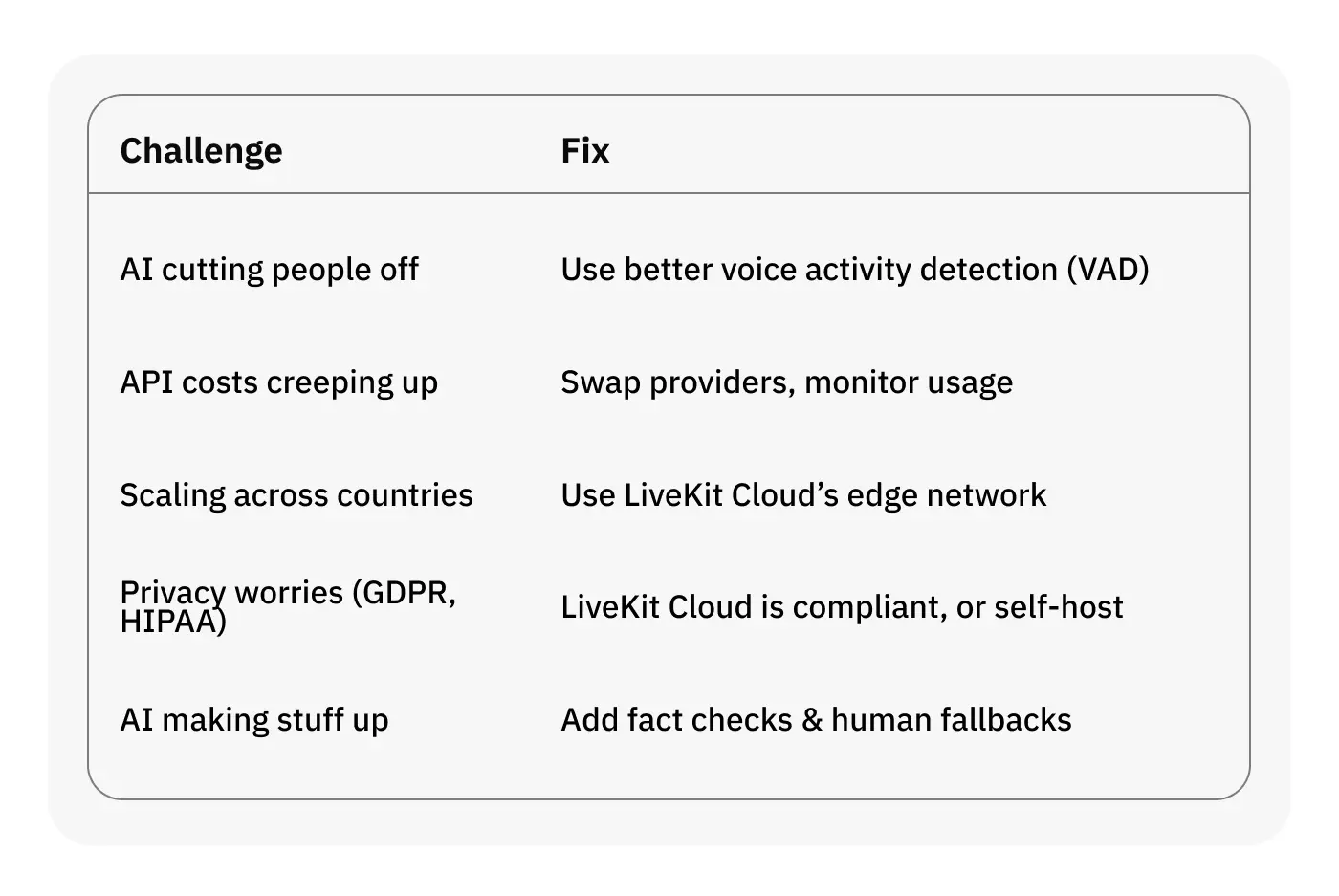

Common Hurdles (and How to Jump Them)

FAQ

How fast can I build an agent?

It depends on the complexity and how production-ready the agent needs to be. With experienced AI engineers, expect the first reliable results in about 1 week.

Does it work with OpenAI Realtime?

Yep, plugins included.

Cloud vs. self-host?

Cloud = easy. Self-host = more control.

Can I add it to my existing app?

Yes. Agents join like normal users.

What about phone calls?

SIP built-in. Connect to real phone lines.

Is it secure?

Yes. GDPR/SOC2/HIPAA-ready on Cloud.

Latency across countries?

Under 1 sec globally, often way less.

Can I test locally?

Yes, terminal-based testing included.

Next Steps

If you’re ready to bring your AI idea to life, drop us a line or book a consultation today. We’ll help you map out the fastest, most efficient path from idea to live AI product.

⚙️Learn more about our AI Development Services
