Ever wondered how companies spot weird patterns in their data before they become big problems? Machine learning for anomaly detection is like having a smart detective that never sleeps, always watching for anything unusual in your data. This guide walks you through the complete process of building such systems, from picking the right data signals to running them in real-time. You'll learn practical ways to use popular tools like isolation forest and one-class SVM, plus smart tricks to make them work better together. We'll show you how to make these systems run fast and efficiently, even with tons of data, and explain their findings in ways that make sense to everyone. Whether you're just starting out or looking to improve your existing setup, you'll finish this guide ready to build anomaly detection systems that actually work in the real world.

Key Takeaways

- Assess data characteristics, identify anomaly types, and consider computational efficiency when selecting machine learning algorithms for anomaly detection

- Implement key algorithms like Isolation Forest, One-Class SVM, and ensemble methods, while continuously evaluating their performance and fine-tuning them

- Enhance algorithm performance by exploring combinations, utilizing ensemble methods, and incorporating new techniques for improved precision and recall

- Ensure scalability and real-time detection by designing for distributed processing, optimizing memory usage, implementing online learning, and maintaining system responsiveness

- Provide interpretable results through effective visualizations, clear explanations, and user feedback mechanisms to foster trust and continuous model improvement

Assessing Data and Anomaly Types

Before selecting an anomaly detection algorithm, evaluate the characteristics of your data and the types of anomalies you expect to encounter. Consider the dimensionality and volume of your data, and whether anomalies are likely to be point anomalies, contextual anomalies, or collective anomalies. Also assess the computational efficiency and scalability requirements of your use case, since algorithms differ in how well they cope with the size and complexity of a dataset.

Our Expertise in AI-Powered Anomaly Detection

At Fora Soft, we've been at the forefront of video surveillance and AI-powered multimedia solutions for over 19 years. Our expertise in implementing anomaly detection algorithms isn't just theoretical - we've successfully deployed these systems across 450+ client organizations, including police departments, medical institutions, and child advocacy centers. Our team's deep understanding of video streaming technology and AI implementation has been crucial in developing sophisticated anomaly detection systems that process multiple HD video streams in real-time.

What sets us apart is our focused expertise in multimedia and AI solutions. While many developers might struggle with selecting the right multimedia server or implementing efficient anomaly detection algorithms, our specialized experience allows us to optimize these systems for maximum performance and reliability. Our rigorous team selection process, where only 1 in 50 candidates receives an offer, ensures that we have the best minds working on these complex challenges. This expertise is reflected in our 100% average project success rating on Upwork, demonstrating our ability to deliver reliable and effective anomaly detection solutions.

Evaluating Data Characteristics and Anomaly Categories

To apply machine learning algorithms for anomaly detection in your software product, you must first effectively evaluate the characteristics of your data and identify the types of anomalies you aim to detect. Consider the normal behavior patterns within your dataset, as this will help determine which anomaly detection methods are most suitable.

Analyze whether you're dealing with point anomalies, contextual anomalies, or collective anomalies, as each type requires different approaches. Unsupervised learning techniques, such as clustering and density estimation, can be particularly useful for identifying anomalous behavior without prior labeling. In fact, these methods have shown remarkable success in cybersecurity applications, achieving detection rates of up to 98% while maintaining false alarm rates around 1% (Jyothsna et al., 2011).

When implementing anomaly detection in video surveillance systems like our V.A.L.T platform, we found that contextual anomalies were particularly important for security monitoring. The system needed to distinguish between normal and suspicious activities in different contexts, such as during medical training sessions or police interrogations.
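The difference between point and contextual anomalies can be illustrated with a small sketch. It is not taken from the V.A.L.T platform: the readings, the "day"/"night" contexts, and the helper names `robust_outliers` and `contextual_outliers` are all hypothetical. The sketch uses a median/MAD-based modified z-score with the commonly used cutoff of 3.5, once over all values and once per context.

```python
from collections import defaultdict
from statistics import median

def robust_outliers(values, threshold=3.5):
    """Return indices whose modified z-score (median/MAD based) exceeds threshold."""
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        return []  # no spread to compare against
    return [i for i, v in enumerate(values)
            if 0.6745 * abs(v - med) / mad > threshold]

def contextual_outliers(records, threshold=3.5):
    """Run the same check separately inside each context group."""
    groups = defaultdict(list)
    for idx, (context, value) in enumerate(records):
        groups[context].append((idx, value))
    flagged = []
    for members in groups.values():
        local = robust_outliers([v for _, v in members], threshold)
        flagged.extend(members[j][0] for j in local)
    return sorted(flagged)

# Hypothetical activity counts: busy during the day, quiet at night.
day = [98, 101, 99, 102, 100, 97, 103, 100, 500]   # 500 is a point anomaly
night = [9, 11, 10, 12, 8, 10, 11, 9, 60]          # 60 is only odd for night
records = [("day", v) for v in day] + [("night", v) for v in night]

global_flags = robust_outliers([v for _, v in records])
contextual_flags = contextual_outliers(records)
print("global:", global_flags, "contextual:", contextual_flags)
```

In this toy data the global check only catches the extreme spike, while the per-context check also catches the night-time reading that looks ordinary when all values are pooled.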

Considering Computational Efficiency and Scalability

When selecting machine learning algorithms for anomaly detection in your software product, weigh computational efficiency and scalability alongside data characteristics and anomaly types. Consider how each candidate algorithm performs as data volumes grow.

Unsupervised learning methods like clustering and density-based techniques can be easier to scale than supervised approaches because they need no labeled data, although their runtime still depends on the specific algorithm. Think about the computational resources required and whether the algorithms can handle streaming data for real-time implementation. Anomaly detection in high-dimensional data can be especially computationally intensive. Choosing algorithms with lower time and space complexity will help keep your system responsive as it scales.

Techniques like dimensionality reduction, approximation methods, and distributed processing can help manage scalability challenges. Carefully evaluate efficiency and scalability to build a robust, performant anomaly detection system.
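As one possible illustration of dimensionality reduction, the sketch below applies a Gaussian random projection with NumPy before any detector runs. The data is synthetic, and the function name `random_projection` and the choice of 64 output dimensions are assumptions made for this example; PCA or another method may suit your data better.

```python
import numpy as np

def random_projection(X, n_components, seed=0):
    """Project rows of X to n_components dimensions with a Gaussian matrix."""
    rng = np.random.default_rng(seed)
    # Entries are scaled so squared distances are preserved in expectation.
    R = rng.normal(0.0, 1.0 / np.sqrt(n_components),
                   size=(X.shape[1], n_components))
    return X @ R

# Hypothetical high-dimensional data: 200 samples, 1000 features.
rng = np.random.default_rng(42)
X = rng.normal(size=(200, 1000))
X_small = random_projection(X, n_components=64)

# Pairwise distances should be roughly preserved after projection.
original = np.linalg.norm(X[0] - X[1])
projected = np.linalg.norm(X_small[0] - X_small[1])
ratio = projected / original
print(X_small.shape, round(float(ratio), 2))
```

A detector trained on `X_small` handles far fewer features per sample, at the cost of some distortion in the distances it relies on.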

Implementing Key Algorithms

When it comes to implementing key algorithms for anomaly detection, you have several powerful options at your disposal. Isolation Forest is a highly effective algorithm for identifying outliers in your data, as it efficiently isolates anomalies by randomly partitioning the data points. On the other hand, if you're dealing with novelty detection, where the goal is to identify previously unseen patterns, One-Class SVM (Support Vector Machine) is a strong choice that learns the boundaries of the normal data and flags any instances that fall outside those boundaries.

Isolation Forest for Outlier Detection

Isolation Forest's unsupervised learning approach detects anomalies by isolating outliers using random feature splits, making it an efficient algorithm for identifying unusual patterns in your product's data. The algorithm demonstrates remarkable effectiveness when working with complex datasets, as it successfully identifies anomalies in high-dimensional data without suffering from the limitations that typically affect traditional distance or density-based methods (Bulut et al., 2024).

This outlier detection technique builds an ensemble of randomized trees (isolation trees), each of which partitions the data by repeatedly choosing a random feature and a random split value. Anomalous instances require fewer splits to be isolated, as they differ notably from the majority of data.

By averaging the path lengths across the forest, you can assign anomaly scores to each data point, with shorter paths indicating a higher likelihood of being an outlier.
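A minimal sketch of this idea using scikit-learn's `IsolationForest` is shown below. The two-dimensional synthetic data, the three injected outliers, and the `contamination=0.01` setting are assumptions chosen for the example, not recommendations for production data.

```python
import numpy as np
from sklearn.ensemble import IsolationForest

# Synthetic data: a Gaussian cloud plus three obvious outliers (assumed values).
rng = np.random.default_rng(0)
normal = rng.normal(0, 1, size=(500, 2))
outliers = np.array([[8.0, 8.0], [-9.0, 7.5], [10.0, -8.0]])
X = np.vstack([normal, outliers])

# contamination is the expected share of anomalies; 1% is a guess here.
forest = IsolationForest(n_estimators=100, contamination=0.01, random_state=0)
labels = forest.fit_predict(X)     # -1 = anomaly, 1 = normal
scores = forest.score_samples(X)   # lower score = shorter paths = more anomalous

print("flagged indices:", np.where(labels == -1)[0])
```

In practice the `contamination` value is rarely known in advance, so it is often easier to rank points by `score_samples` and pick a threshold from validation data or user feedback.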

One-Class SVM for Novelty Detection

One-Class Support Vector Machine (SVM) is a powerful unsupervised learning algorithm that excels at novelty detection, making it an essential tool for identifying new or unusual patterns in your product's data. By training the one-class SVM model using only normal samples, it learns to define a boundary that includes the majority of the data points.

During the training process, the algorithm optimizes the boundary to maximize the margin between the normal samples and the origin in a high-dimensional feature space. While traditional approaches focused on maximizing the minimum margin, research indicates that considering the entire margin distribution yields more effective results (Zhang & Zhou, 2020). When new data is introduced to the trained model, it classifies points falling outside the learned boundary as anomalies.

This unsupervised anomaly detection approach is particularly useful when you have limited labeled anomaly data, enabling you to detect previously unseen anomalies in your product's data.
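The training-on-normal-data workflow can be sketched with scikit-learn's `OneClassSVM`. The synthetic training set, the two test points, and the `nu=0.05` value are illustrative assumptions; features are standardized first because the RBF kernel is sensitive to feature scale.

```python
import numpy as np
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import OneClassSVM

# Train only on samples assumed to be normal.
rng = np.random.default_rng(1)
X_train = rng.normal(0, 1, size=(400, 2))

# nu roughly bounds the fraction of training points treated as outliers.
model = make_pipeline(
    StandardScaler(),
    OneClassSVM(kernel="rbf", gamma="scale", nu=0.05),
)
model.fit(X_train)

# One typical point and one far from anything seen during training.
X_new = np.array([[0.1, -0.2], [6.0, 6.0]])
pred = model.predict(X_new)   # 1 = inside the learned boundary, -1 = novelty
print(pred)
```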

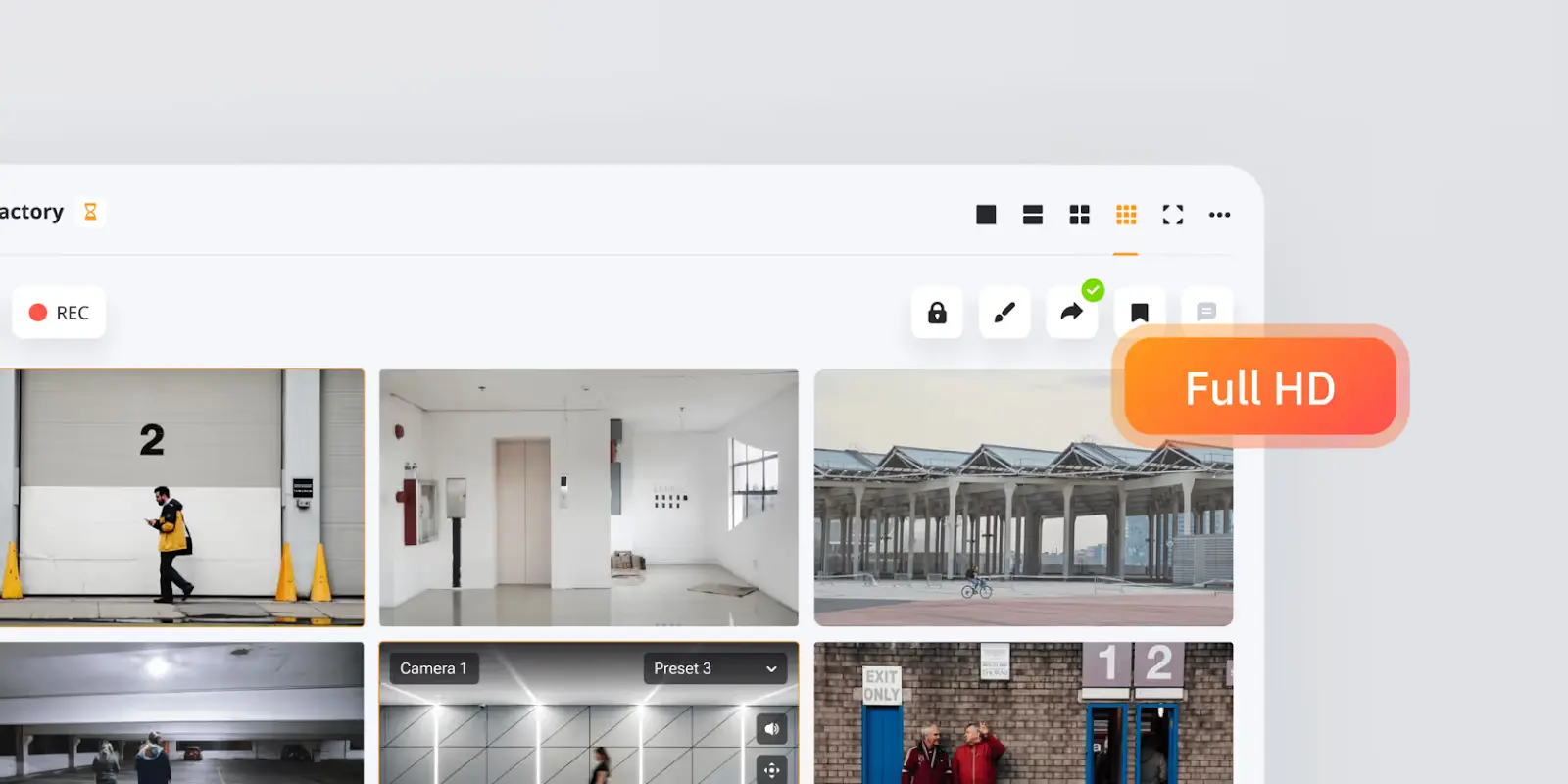

Real-World Application: V.A.L.T Video Surveillance System

In developing our V.A.L.T video surveillance system, we implemented sophisticated anomaly detection algorithms to ensure security and quality assurance in sensitive environments. Our experience with police departments, medical institutions, and child advocacy organizations taught us the importance of real-time anomaly detection in video streams. We designed the system to detect unusual patterns in surveillance footage, particularly during crucial moments like police interrogations or medical training sessions. The system's ability to mark and report anomalies in real-time proved essential for our clients, who needed to quickly identify and respond to irregular events without rewatching hours of footage.

Enhancing Algorithm Performance

To enhance the performance of your anomaly detection algorithms, you can combine multiple algorithms and use ensemble methods. Continuously evaluate your algorithms against appropriate metrics and benchmarks, and refine them based on the insights gained. By iteratively improving your algorithms and adapting them to evolving data patterns, you can keep detection quality high and deliver a more reliable and effective solution to your end users.

Combining Algorithms and Ensemble Methods

Ensemble methods and algorithm combinations strengthen anomaly detection systems, enabling them to catch subtle outliers that might slip past a single model. By combining the strengths of different approaches, such as neural networks paired with careful feature selection, you can build a stronger detection solution, for example for predictive maintenance.

Ensemble methods combine the outputs of multiple anomaly detection algorithms, capitalizing on their unique capabilities to flag potential issues. This layered defense reduces the chance that an abnormality goes unnoticed, with studies showing these methods can effectively reduce false positive rates through diverse decision-making approaches (Zhang et al., 2020).

When designing your detection system, carefully consider which algorithm combinations will provide the most thorough coverage for your specific data and use case. Experimenting with various ensembles and evaluating their performance will help you zero in on the best configuration. With the right mix of methods, you'll be well-equipped to proactively identify and address anomalies.
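One simple way to combine detectors is to average their rank-normalized scores, sketched below with scikit-learn's `IsolationForest` and `LocalOutlierFactor`. This is only one of many possible combination schemes; the synthetic data, the helper `rank_normalize`, and the equal weighting of the two detectors are assumptions for illustration.

```python
import numpy as np
from sklearn.ensemble import IsolationForest
from sklearn.neighbors import LocalOutlierFactor

def rank_normalize(scores):
    """Map scores to [0, 1] by rank; a higher input means more anomalous."""
    ranks = np.argsort(np.argsort(scores))
    return ranks / (len(scores) - 1)

# Synthetic data: a Gaussian cloud plus two injected outliers (assumed values).
rng = np.random.default_rng(7)
X = np.vstack([rng.normal(0, 1, size=(300, 2)), [[7.0, 7.0], [-8.0, 6.0]]])

# Both libraries report "higher = more normal", so negate to get anomaly scores.
iso_scores = -IsolationForest(random_state=0).fit(X).score_samples(X)
lof = LocalOutlierFactor(n_neighbors=20).fit(X)
lof_scores = -lof.negative_outlier_factor_

# Rank normalization puts the two detectors on a comparable scale.
combined = (rank_normalize(iso_scores) + rank_normalize(lof_scores)) / 2
top2 = {int(i) for i in np.argsort(combined)[-2:]}
print("most anomalous indices:", sorted(top2))
```

Averaging ranks rather than raw scores avoids one detector dominating simply because its scores span a wider numeric range.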

Continuous Evaluation and Refinement

Continuously monitor and fine-tune your anomaly detection algorithms so they keep performing well as data patterns change. Set up a continuous evaluation process that tracks the accuracy and operational efficiency of your models in production. Regularly review the results of your anomaly detection process, identify areas for improvement, and make the necessary adjustments.

Consider incorporating new machine learning techniques or refining existing ones to enhance the precision and recall of your algorithms. By proactively monitoring and optimizing your models, you'll ensure they remain effective and efficient over time, even as data patterns evolve.
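Precision and recall are straightforward to track once some alerts have been labeled. The sketch below computes them, along with F1, from a small hand-made set of labels; the label vectors are invented for the example, and the function name `precision_recall_f1` is hypothetical.

```python
def precision_recall_f1(y_true, y_pred):
    """Compute precision, recall and F1 where 1 marks an anomaly."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t and p)
    fp = sum(1 for t, p in zip(y_true, y_pred) if not t and p)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t and not p)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1

# Hypothetical ground truth and detector output for ten events.
y_true = [0, 0, 1, 0, 1, 0, 0, 1, 0, 0]
y_pred = [0, 1, 1, 0, 0, 0, 0, 1, 0, 0]

precision, recall, f1 = precision_recall_f1(y_true, y_pred)
print(f"precision={precision:.2f} recall={recall:.2f} f1={f1:.2f}")
```

Because anomalies are rare, these metrics are usually more informative than plain accuracy, which stays high even when every anomaly is missed.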

This ongoing refinement process is essential for maintaining the reliability and value of your anomaly detection system, helping you stay ahead of potential issues and deliver the best possible results for your users.

Our experience with the V.A.L.T system demonstrated the importance of continuous refinement in anomaly detection algorithms. The system's ability to handle multiple HD video streams while maintaining detection accuracy required regular optimization of our algorithms, especially when scaling to support 450+ client organizations.

Ensuring Scalability and Real-Time Detection

When designing anomaly detection algorithms for your product, it's essential to take into account scalability and real-time performance. You can achieve this by utilizing distributed processing frameworks and online learning techniques that allow the algorithm to adjust to new data in real-time. Additionally, optimizing the algorithm for memory usage and low latency guarantees that it can handle large-scale datasets and provide timely detection results.

Distributed Processing and Online Learning

Tackle scalability and real-time anomaly detection head-on by utilizing distributed processing and online learning techniques. You can process massive amounts of data in parallel by distributing the workload across multiple machines or clusters. This allows your anomaly detection systems to handle large-scale datasets efficiently.

Online learning enables your machine learning models to adapt continuously to new data points in real time, without retraining the entire model from scratch. Studies in natural language processing have shown that small perturbations in training data can lead to significantly different model predictions, highlighting the importance of robust online learning approaches (Leszczynski et al., 2020). By combining distributed processing with online learning, you can build highly scalable and responsive anomaly detection systems that handle growing data volumes and detect anomalies as they occur.
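As a minimal sketch of online learning, the detector below keeps a running mean and variance with Welford's algorithm and scores each new value before learning from it. The class name `StreamingZScoreDetector`, the threshold of 4 standard deviations, the 30-sample warm-up, and the decision not to learn from flagged values are all assumptions for this example rather than a description of any particular production system.

```python
import math

class StreamingZScoreDetector:
    """Incremental z-score detector using Welford's running statistics."""

    def __init__(self, threshold=4.0, warmup=30):
        self.threshold = threshold
        self.warmup = warmup      # samples to observe before flagging anything
        self.n = 0
        self.mean = 0.0
        self.m2 = 0.0             # running sum of squared deviations

    def update(self, x):
        """Score x against current statistics, then learn from it."""
        is_anomaly = False
        if self.n >= self.warmup:
            std = math.sqrt(self.m2 / (self.n - 1))
            is_anomaly = std > 0 and abs(x - self.mean) / std > self.threshold
        if not is_anomaly:
            # Design choice: flagged values do not update the baseline.
            self.n += 1
            delta = x - self.mean
            self.mean += delta / self.n
            self.m2 += delta * (x - self.mean)
        return is_anomaly

# Hypothetical stream: a repeating pattern with one injected spike.
stream = [10 + (i % 5) * 0.5 for i in range(100)]
stream[60] = 25.0

detector = StreamingZScoreDetector()
flagged = [i for i, x in enumerate(stream) if detector.update(x)]
print("flagged positions:", flagged)
```

Each update costs constant time and memory, which is what makes this style of model suitable for per-event scoring in a stream.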

Optimizing for Memory Usage and Low Latency

To keep your anomaly detection system performant and responsive, optimize for memory usage and low latency. Choosing algorithms that are efficient in both memory and computation, such as tree-based ensembles like random forests, helps the detection loop run without bottlenecks.

Here are some key strategies to consider:

- Profile and benchmark your code to identify memory and latency hotspots

- Employ techniques like data subsampling and feature selection to reduce model size

- Utilize distributed processing frameworks to parallelize model training and inference
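The first two strategies can be sketched with Python's standard library alone. Below, a hypothetical `profile` helper measures wall-clock time and peak memory with `time.perf_counter` and `tracemalloc`, and compares a full batch against a 10% subsample. The `score_batch` function is only a stand-in for a real detector.

```python
import time
import tracemalloc

def profile(func, *args, **kwargs):
    """Return (result, elapsed_seconds, peak_bytes) for a single call."""
    tracemalloc.start()
    start = time.perf_counter()
    result = func(*args, **kwargs)
    elapsed = time.perf_counter() - start
    _, peak = tracemalloc.get_traced_memory()
    tracemalloc.stop()
    return result, elapsed, peak

def score_batch(values):
    """Stand-in scoring step: absolute distance from the batch mean."""
    mean = sum(values) / len(values)
    return [abs(v - mean) for v in values]

full = list(range(200_000))
subsample = full[::10]        # keep every 10th point

_, t_full, mem_full = profile(score_batch, full)
_, t_sub, mem_sub = profile(score_batch, subsample)

print(f"full:      {t_full:.4f}s, peak {mem_full / 1e6:.1f} MB")
print(f"subsample: {t_sub:.4f}s, peak {mem_sub / 1e6:.1f} MB")
```

Whether a subsample preserves detection quality depends on the data, so measure accuracy alongside these resource numbers before adopting it.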

Providing Interpretable Results

When implementing anomaly detection algorithms, you should prioritize providing interpretable results to end users. Visualizing identified anomalies and offering clear explanations for why they were flagged can help users understand and trust the system's outputs. Additionally, enabling user feedback mechanisms allows for continuous improvement of the underlying models based on real-world observations and domain expertise.

Visualizing Anomalies and Offering Clear Explanations

Visualize anomalies and provide clear explanations to help users quickly understand and act on the observations surfaced by your machine learning algorithms.

When presenting the results of your anomaly detection task, consider these key points:

- Employ intuitive visualizations like heat maps, scatter plots, or interactive dashboards to highlight anomalous data points and patterns

- Accompany visualizations with concise explanations that describe the nature of the anomaly, its potential impact, and recommended actions

- Ensure your explanations are accessible to non-technical stakeholders by minimizing jargon and focusing on the practical consequences of the detected anomalies
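One lightweight way to produce such explanations is to report which features deviate most from their usual values. The sketch below does this with per-feature z-scores; the metric names, the baseline history, and the function `explain_anomaly` are hypothetical, and more sophisticated attribution methods exist.

```python
from statistics import mean, stdev

def explain_anomaly(baseline, sample, top_k=2):
    """Describe the top_k features that deviate most from their history."""
    contributions = []
    for name, history in baseline.items():
        mu, sigma = mean(history), stdev(history)
        z = (sample[name] - mu) / sigma if sigma else 0.0
        contributions.append((abs(z), name, z, mu))
    contributions.sort(reverse=True)   # largest deviation first

    lines = []
    for _, name, z, mu in contributions[:top_k]:
        direction = "above" if z > 0 else "below"
        lines.append(
            f"{name} is {sample[name]:g}, {abs(z):.1f} standard deviations "
            f"{direction} its usual {mu:g}"
        )
    return lines

# Hypothetical recent history for three metrics and one flagged sample.
baseline = {
    "requests_per_min": [100, 102, 98, 101, 99],
    "error_rate": [0.01, 0.012, 0.009, 0.011, 0.008],
    "latency_ms": [200, 210, 190, 205, 195],
}
sample = {"requests_per_min": 101, "error_rate": 0.05, "latency_ms": 260}

explanation = explain_anomaly(baseline, sample)
for line in explanation:
    print(line)
```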

In implementing the V.A.L.T platform, we developed an intuitive marking system that allows users to flag and annotate anomalies in real-time, generating searchable logs and PDF reports for stakeholders. This practical application showed how vital clear visualization and explanation of anomalies are in high-stakes environments like police departments and medical institutions.

Enabling User Feedback for Model Improvement

Interpretability forms the bedrock of trust between your anomaly detection system and its users, so prioritize techniques that allow them to understand and provide feedback on the model's results. When implementing machine learning anomaly detection with unsupervised learning algorithms, create clear visualizations and explanations that allow users to grasp why certain data points were flagged as anomalies. This transparency will encourage user feedback, which you can then utilize to fine-tune your model's performance.

Consider incorporating an intuitive interface that lets users easily report false positives or false negatives, and use this valuable input to retrain your algorithms.
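Full retraining is not the only way to use feedback. A simpler first step, sketched below, is to re-tune the alert threshold against the labels users have provided. The feedback records, the candidate thresholds, and the function `tune_threshold` are assumptions made for this example.

```python
def tune_threshold(feedback, candidates):
    """Pick the score threshold with the best F1 on user-labeled events.

    feedback: list of (anomaly_score, user_confirmed_anomaly) pairs.
    """
    best_threshold, best_f1 = None, -1.0
    for t in candidates:
        tp = sum(1 for s, y in feedback if s >= t and y)
        fp = sum(1 for s, y in feedback if s >= t and not y)
        fn = sum(1 for s, y in feedback if s < t and y)
        f1 = 2 * tp / (2 * tp + fp + fn) if tp else 0.0
        if f1 > best_f1:
            best_threshold, best_f1 = t, f1
    return best_threshold, best_f1

# Hypothetical feedback: detector score and whether the user confirmed it.
feedback = [
    (0.95, True), (0.90, True), (0.72, False), (0.70, True),
    (0.65, False), (0.60, False), (0.40, False),
]
candidates = [0.5, 0.6, 0.7, 0.8, 0.9]

best_threshold, best_f1 = tune_threshold(feedback, candidates)
print(f"best threshold={best_threshold}, F1={best_f1:.3f}")
```

Feedback collected this way is biased toward events that were shown to users, so missed anomalies need a separate reporting path to be counted.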

Frequently Asked Questions

How Can Anomaly Detection Algorithms Handle Imbalanced Datasets Effectively?

To effectively handle imbalanced datasets, you can use techniques like oversampling the minority class, undersampling the majority class, or applying cost-sensitive learning. These methods help balance the class distribution and improve anomaly detection performance.

What Are the Best Practices for Feature Engineering in Anomaly Detection?

To boost anomaly detection, engineer informative features that capture unique patterns. Combine domain expertise with statistical techniques like PCA. Iteratively refine features based on model performance. Regularly update features as data evolves to maintain accuracy.

How Do Unsupervised and Semi-Supervised Algorithms Compare in Anomaly Detection Performance?

Unsupervised algorithms excel at detecting novel anomalies, but they're prone to false positives. Semi-supervised methods utilize labeled data to improve accuracy, but they can miss new anomaly types. Your choice depends on your data and goals.

What Are the Trade-Offs Between Batch and Streaming Anomaly Detection Approaches?

Batch processing can offer higher accuracy, since the model sees the full dataset at once, but it delivers results more slowly. Streaming enables real-time detection but may compromise precision. Consider your latency requirements and available data when choosing between batch and streaming for your anomaly detection system.

How Can Anomaly Detection Systems Be Integrated With Existing Software Infrastructure?

To integrate anomaly detection with your existing software, use APIs or microservices for modular deployment. Ensure data pipelines feed detection models seamlessly. Incorporate alerts into your monitoring stack and provide user-friendly configuration options.

To Sum Up

You now possess the knowledge to utilize machine learning for powerful anomaly detection in your products. By carefully evaluating your data, selecting ideal algorithms, and fine-tuning their performance, you can build scalable systems that identify anomalies in real-time. Remember to preprocess data effectively, choose relevant features, and evaluate models thoroughly. With interpretable results and strong implementations, anomaly detection will take your offerings to new heights.

References

Bulut, O., Gorgun, G., & He, S. (2024). Unsupervised anomaly detection in sequential process data. Zeitschrift für Psychologie, 232(2), 74-94. https://doi.org/10.1027/2151-2604/a000558

Jyothsna, V., Prasad, V., & Prasad, K. (2011). A review of anomaly based intrusion detection systems. International Journal of Computer Applications, 28(7), 26-35. https://doi.org/10.5120/3399-4730

Leszczynski, M., May, A., Zhang, J., et al. (2020). Understanding the downstream instability of word embeddings. arXiv. https://doi.org/10.48550/arxiv.2003.04983

Zhang, J., Li, Z., & Chen, S. (2020). Diversity aware-based sequential ensemble learning for robust anomaly detection. IEEE Access, 8, 42349-42363. https://doi.org/10.1109/access.2020.2976850

Zhang, T., & Zhou, Z. (2020). Optimal margin distribution machine. IEEE Transactions on Knowledge and Data Engineering, 32(6), 1143-1156. https://doi.org/10.1109/tkde.2019.2897662
